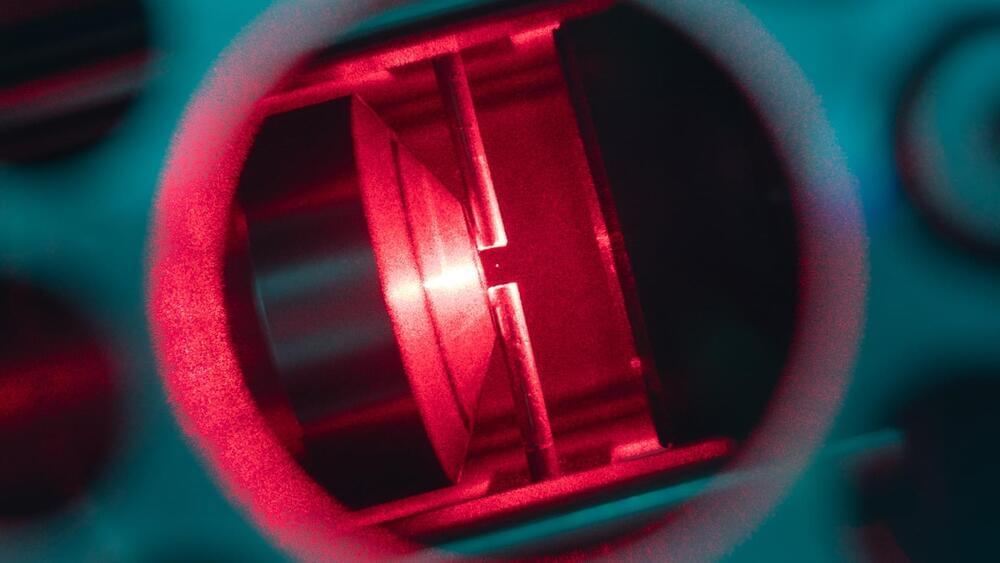

Free-space optical communication links promise greater security and higher bandwidths but can suffer from noise in daylight. This is particularly detrimental in quantum communications, where current mitigation techniques such as spectral, temporal, and spatial filtering are not yet sufficient to make daylight tolerable for satellite quantum key distribution (SatQKD). Because all current SatQKD systems are polarization-encoded, polarization filtering has not been investigated. However, time- and phase-encoded SatQKD makes it possible to filter in polarization in addition to the existing domains. Scattered daylight can be more than 90% polarized in the visible band, and polarization filtering yields a reduction in detected daylight of between 3 dB and 13 dB. This is enough to reduce the brightness of 780 nm daylight to below the unfiltered equivalent at 1,550 nm. Simulations indicate that polarization filtering increases the secure key rate and allows SatQKD to be performed at dawn and dusk. This could open the way for daylight SatQKD at shorter near-infrared wavelengths while retaining their benefits.
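The quoted 3 dB to 13 dB range can be reproduced with a simple back-of-envelope model. This is an illustrative sketch and not necessarily the authors' calculation. It assumes that sky light is a mix of fully linearly polarized light and unpolarized light with degree of polarization P. It also assumes an ideal linear polarizer, oriented to block the polarized component, whose axis matches the signal's polarization. Under these assumptions the polarizer passes only half of the unpolarized part, so the transmitted fraction is (1 - P)/2 and the reduction is 10 log10[2/(1 - P)]. This gives about 3 dB for unpolarized light (P = 0) and about 13 dB at P = 0.9. The function name below is hypothetical.

```python
import math


def polarization_filter_reduction_db(dop: float) -> float:
    """Reduction (dB) in detected background light from an ideal polarizer.

    Hypothetical helper for illustration. Assumes the background is a mix of
    fully linearly polarized light (fraction `dop`) and unpolarized light, and
    an ideal polarizer oriented to block the polarized component. It therefore
    transmits only half of the unpolarized part, i.e. (1 - dop) / 2.
    """
    if not 0.0 <= dop < 1.0:
        raise ValueError("degree of polarization must be in [0, 1)")
    transmitted_fraction = (1.0 - dop) / 2.0
    return -10.0 * math.log10(transmitted_fraction)


# Unpolarized sky light gives the lower bound; 90% polarized light the upper one.
for dop in (0.0, 0.5, 0.9):
    print(f"P = {dop:.1f}: {polarization_filter_reduction_db(dop):.1f} dB")
```

In practice, a real polarizer's finite extinction ratio and the sky's varying polarization angle would reduce the upper figure, so the model only bounds the ideal case.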

Published by Optica Publishing Group under the terms of the Creative Commons Attribution 4.0 License. Further distribution of this work must maintain attribution to the author(s) and the published article’s title, journal citation, and DOI.