Elon Musk seems to think that the Tesla Bot will take over many of the boring, repetitive, and dangerous jobs that are fundamental to our economy. Musk believes the Tesla Bot will eventually overtake Tesla vehicles as the company's primary source of revenue…

What may happen when the first truly smart robots appear, based on brain emulations, or ems? Scan a human brain, then run a model with the same connections on a fast computer, and you have a robot brain, but one that is recognizably human.

Train an em to do some job and copy it a million times, and an army of workers is at your disposal. When ems can be made cheaply, within perhaps a century, they will displace humans in most jobs.

In this new economic era, the world economy may double in size every few weeks.

Applying decades of expertise in physics, computer science, and economics, the speaker uses standard theories to paint a detailed picture of a world dominated by ems.

He is an Associate Professor of Economics and received his Ph.D. in social sciences from Caltech in 1997. He joined George Mason's economics faculty in 1999 after completing a two-year post-doc at U.C. Berkeley. His major fields of interest include health policy, regulation, and formal political theory. His recent book is The Age of Em: Work, Love and Life When Robots Rule the Earth (Oxford University Press, 2016).

This talk was given at a TEDx event using the TED conference format but independently organized by a local community.

In this episode, renowned AI researcher Pedro Domingos, author of The Master Algorithm, takes us deep into the world of Connectionism—the AI tribe behind neural networks and the deep learning revolution.

From the birth of neural networks in the 1940s to the explosive rise of transformers and ChatGPT, Pedro unpacks the history, breakthroughs, and limitations of connectionist AI. Along the way, he explores how supervised learning continues to quietly power today’s most impressive AI systems—and why reinforcement learning and unsupervised learning are still lagging behind.

We also dive into:

The tribal war between Connectionists and Symbolists.

The surprising origins of Backpropagation.

How transformers redefined machine translation.

Why GANs and generative models exploded (and then faded).

The myth of modern reinforcement learning (DeepSeek, RLHF, etc.)

The danger of AI research narrowing too soon around one dominant approach.

Whether you’re an AI enthusiast, a machine learning practitioner, or just curious about where intelligence is headed, this episode offers a rare deep dive into the ideological foundations of AI—and what’s coming next.

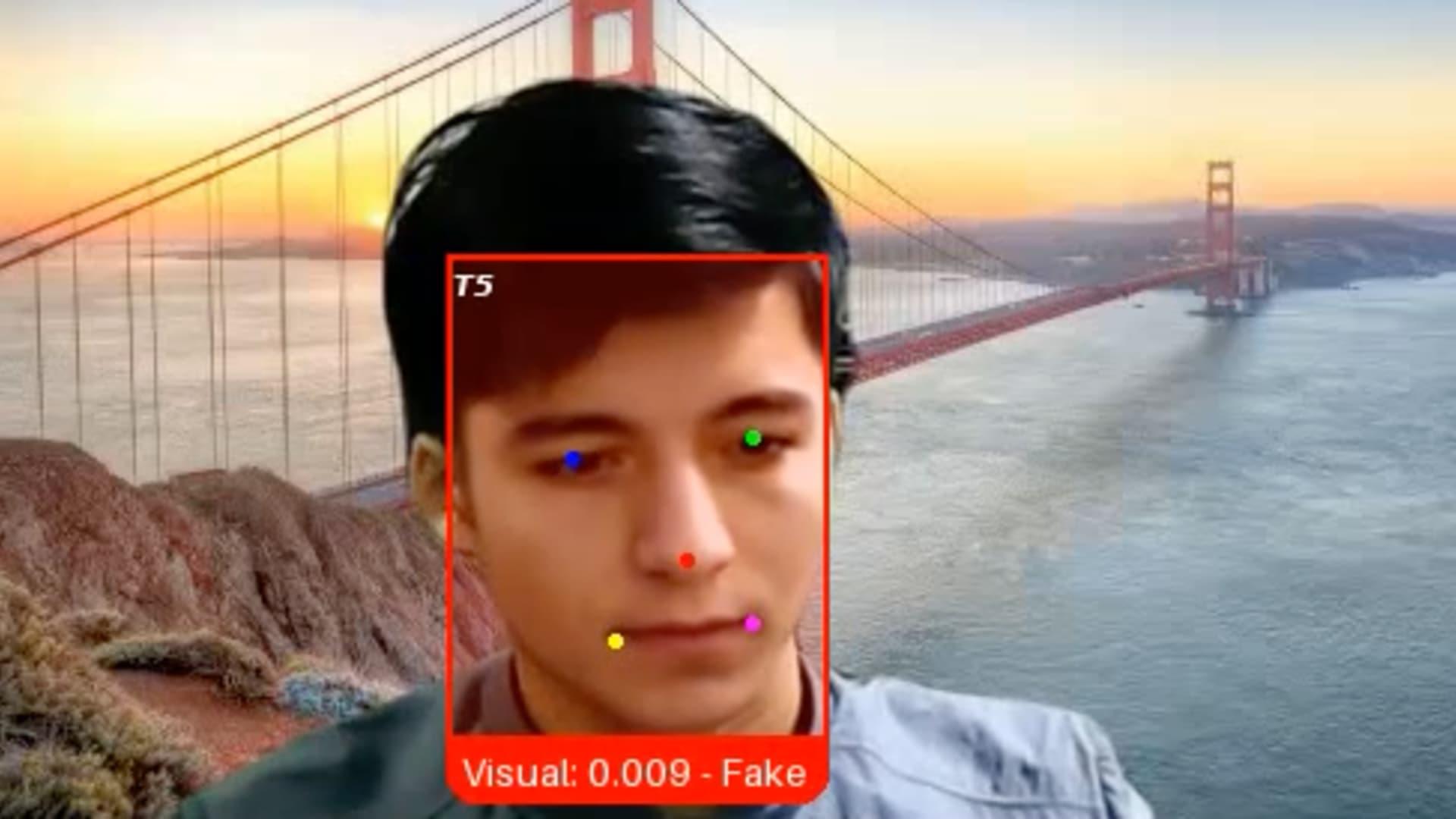

That’s because the candidate, whom the firm has since dubbed “Ivan X,” was a scammer using deepfake software and other generative AI tools in a bid to get hired by the tech company, said Pindrop CEO and co-founder Vijay Balasubramaniyan.

“Gen AI has blurred the line between what it is to be human and what it means to be machine,” Balasubramaniyan said. “What we’re seeing is that individuals are using these fake identities and fake faces and fake voices to secure employment, even sometimes going so far as doing a face swap with another individual who shows up for the job.”

Companies have long fought off attacks from hackers hoping to exploit vulnerabilities in their software, employees or vendors. Now, another threat has emerged: Job candidates who aren’t who they say they are, wielding AI tools to fabricate photo IDs, generate employment histories and provide answers during interviews.

The company was founded to sell automation software. That entire market is being upended by AI, particularly agentic AI, which is supposed to click around on the internet and do things for you.

After stepping down from and returning to the CEO role, Daniel Dines is preparing for the agentic AI era.

Artificial intelligence is evolving rapidly, bringing us closer to the Singularity, a future where AI surpasses human intelligence. This shift could transform every aspect of life, from jobs to technology, creating both exciting possibilities and significant risks. As AI continues to advance at an unprecedented pace, understanding its impact on society is more crucial than ever.

🔍 Key Topics Covered:

The rapid evolution of AI and its connection to the looming Singularity, where machines may surpass human intelligence.

How AI could reshape industries, jobs, and even human life as we know it.

The potential risks of uncontrolled AI growth, including the rise of misinformation, biased outcomes, and the threat of AI-designed chemical weapons.

The need for a global governance framework to regulate and monitor AI advancements.

The ethical and philosophical questions surrounding AI’s role in society, including its impact on human consciousness and labor.

🎥 What You’ll Learn:

The rapid advancement of artificial intelligence and its potential to reach the Singularity sooner than expected.

How AI systems like neural networks and symbolic systems impact modern technology and the dangers they pose when left unchecked.

The role AI could play in jobs, governance, and the potential for global cooperation to ensure safe AI development.

Insight into real-world concerns such as disinformation, biased AI systems, and even the possibility of AI leading to catastrophic societal changes.

📊 Why This Matters:

These developments highlight the critical need for responsible AI governance as the technology progresses toward potentially surpassing human intelligence. Understanding the rapid growth of AI and its implications helps us prepare for the future, where machines could fundamentally change society. Whether you’re interested in technology, philosophy, or the future of work, this content offers an in-depth look at the powerful impact AI will have on the world.

*DISCLAIMER*:

The content presented is for informational and entertainment purposes, offering insights into the future of AI based on current trends and technological research. The creators are not AI experts or legal professionals, and the information should not be taken as professional advice. Viewer discretion is advised due to the speculative nature of the topics discussed. The views expressed are those of the content creator and do not necessarily represent any affiliated individuals or organizations.

Elon Musk envisions a future where automation and AI could transform society by creating abundance and new job opportunities, while also posing challenges such as job displacement, wealth concentration, and the need for innovative solutions like universal basic income.

## Questions to inspire discussion

## Income Opportunities in the Age of Abundance

🤖 Q: How can I profit from owning assets in an abundant future? A: Rent out assets like bots, cars, and homes as a major income source, creating new job opportunities in asset management and maintenance.

🎨 Q: What industries will thrive in a post-scarcity world? A: Bespoke industries, such as the handmade goods sold on Etsy, will flourish as people seek custom-made products from human artisans, creating new job opportunities for unique, high-quality craftsmanship.

## Lifestyle Changes and Affordability

💰 Q: How will abundance affect the cost of living? A: Middle-class living becomes possible on $20,000/year instead of $100,000/year, as the costs of energy, transportation, homes, and groceries fall and luxuries become more accessible.

✈️ Q: Will travel become more affordable in an abundant future? A: Vacation land and travel become more accessible as abundance reduces the cost of travel and accommodation, creating new job opportunities in the travel industry.

## Entertainment and Sports

🏆 Q: How will abundance impact professional sports and gaming? A: Professional athletes and gamers will gain popularity and lucrative opportunities as more people can afford tickets and subscriptions, creating new job opportunities in competitive fields.

## Economic Considerations

Historically, no technological improvement has done away with human work. It was the people smart enough to understand the paradigm shift, and to develop new skills, who became more productive.

In this insightful conversation with OpenAI’s CPO Kevin Weil, we discuss the rapid acceleration of AI and its implications. Kevin makes the shocking prediction that coding will be fully automated THIS YEAR, not by 2027, as others suggest. He explains how OpenAI’s models are already ranking among the world’s top programmers and shares his thoughts on Deep Research, GPT-4.5’s human-like qualities, the future of jobs, and the timeline for GPT-5. Don’t miss Kevin’s billion-dollar startup idea and his vision for how AI will transform education and democratize software creation.

00:00 — Summary.

01:21 — Introduction.

03:20 — Discussion on OpenAI being both a research and product company.

11:05 — Timeline for GPT-5

11:38 — AI model commoditization and maintaining competitive advantage.

15:09 — Deep Research capabilities.

24:22 — Coding automation prediction: THIS YEAR

30:05 — AI in creative work and design.

36:43 — Future of programming and engineers.

38:32 — Will AI create new job categories?

40:58 — Billion-dollar AI startup ideas.

46:27 — Voice interfaces and robotics.

49:28 — Closing thoughts.