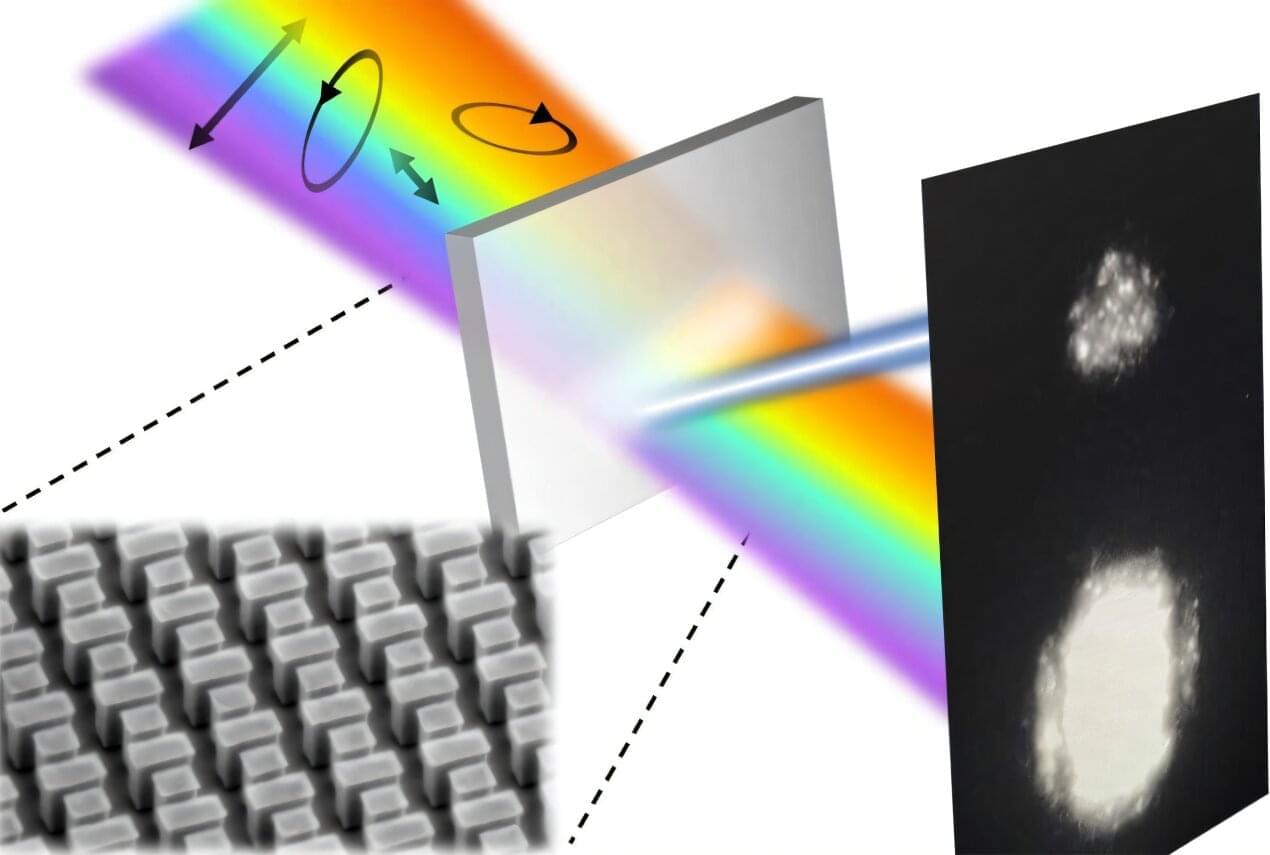

Without the ability to control infrared light waves, autonomous vehicles wouldn’t be able to quickly map their environment and keep “eyes” on the cars and pedestrians around them; augmented reality couldn’t render realistic 3D displays; and doctors would lose an important tool for early cancer detection. Dynamic light control allows for upgrades to many existing systems, but the complexity of fabricating programmable thermal devices limits their availability.

A new active metasurface, the electrically programmable graphene field-effect transistor (Gr-FET), from the labs of Sheng Shen and Xu Zhang in Carnegie Mellon University’s College of Engineering, enables the control of mid-infrared states across a wide range of wavelengths, directions, and polarizations. This enhanced control paves the way for advances in applications ranging from infrared camouflage to personalized health monitoring.

“For the first time, our active metasurface devices exhibited the monolithic integration of the rapidly modulated temperature, addressable pixelated imaging, and resonant infrared spectrum,” said Xiu Liu, postdoctoral associate in mechanical engineering and lead author of the paper published in Nature Communications. “This breakthrough will be of great interest to a wide range of infrared photonics, materials science, biophysics, and thermal engineering audiences.”