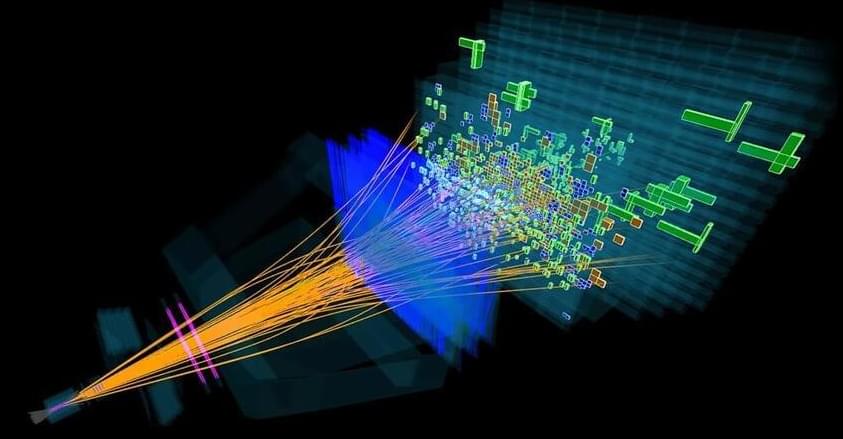

We have very specific predictions for how particles ought to decay. When we look at B-mesons all together, something vital doesn’t add up.


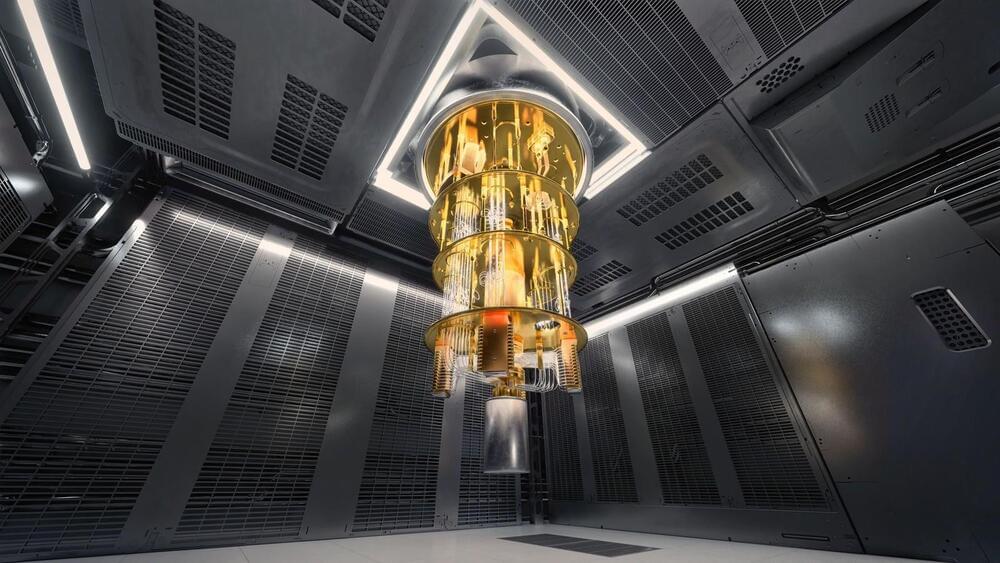

Each quantum computing trajectory faces its own developmental challenges. Gate-based quantum computers need improvements in scalability, error correction and gate fidelity to achieve stable, accurate computations. The whole-systems approach needs better qubit connectivity and less noise interference to make computations more reliable. Meanwhile, parsing-of-totality depends on advances in sensing techniques that can harness atoms’ deeper patterns and potentiality.

Major investments are currently directed toward gate-based quantum computing, with IBM, Google and Microsoft leading the charge in pursuit of universal quantum computation. However, even the idea of universal quantum computation is not settled: the parsing-of-totality approach suggests that new quantum patterns, properties and even principles may exist, which could require a conceptual shift as radical as the transition from classical bits to qubits.

All three trajectories will play essential roles in the future of quantum computing. Gate-based systems may ultimately achieve universal applicability. Whole-systems quantum computing will continue to recast a larger class of problems as complex adaptive systems that can be solved through optimization. The parsing-based approaches will leverage novel quantum principles to spawn new quantum technologies.

This study focuses on topological time crystals, which take this idea and make it a bit more complex (not that it wasn’t already). A topological time crystal’s behavior is determined by its overall structure rather than by a single atom or interaction. As ZME Science describes it, if a normal time crystal is a strand in a spider’s web, a topological time crystal is the entire web, and changing even a single thread can affect the whole thing. This network of connections is a feature, not a flaw: it makes the topological crystal more resilient to disturbances, something quantum computers could definitely put to use.

In this experiment, scientists essentially embedded this behavior in a quantum computer and achieved fidelities higher than those of previous quantum experiments. And although all of this occurred in a prethermal regime, according to ZME Science it’s still a big step toward a more stable quantum computer, one capable of finally unlocking the future that always feels a decade beyond our grasp.

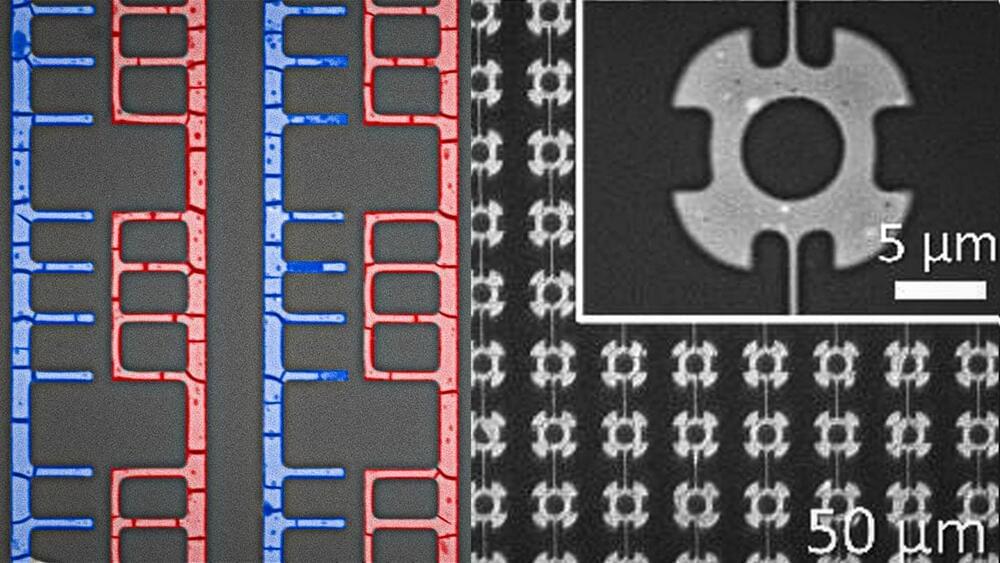

A remarkable proof-of-concept project has successfully manufactured nanoscale diodes and transistors using a fast, cheap new production technique in which liquid metal is directed to self-assemble into precise 3D structures.

In a peer-reviewed study due to be released in the journal Materials Horizons, a North Carolina State University team outlined and demonstrated the new method using an alloy of indium, bismuth and tin, known as Field’s metal.

The liquid metal was placed beside a mold, which the researchers say can be made in any size or shape. As the metal is exposed to oxygen, a thin oxide layer forms on its surface. A liquid is then poured onto it, containing negatively charged ligand molecules designed to pull individual metal atoms off that oxide layer as positively charged ions and bind with them.

What do motion detectors, self-driving cars, chemical analyzers and satellites have in common? They all contain detectors for infrared (IR) light. Apart from the readout electronics, such detectors usually consist of a crystalline semiconductor material at their core.

Such materials are challenging to manufacture: They often require extreme conditions, such as a very high temperature, and a lot of energy. Empa researchers are convinced that there is an easier way. A team led by Ivan Shorubalko from the Transport at the Nanoscale Interfaces laboratory is working on miniaturized IR detectors made of colloidal quantum dots.

The term “quantum dots” does not sound like an easy concept to most people. Shorubalko explains, “The properties of a material depend not only on its chemical composition, but also on its dimensions.” If you produce tiny particles of a certain material, they may have different properties than larger pieces of the very same material. This is due to quantum effects, hence the name “quantum dots.”
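To make the size dependence concrete, here is a minimal sketch using the textbook particle-in-a-box model. This is an assumption on our part, not Empa’s model of its colloidal dots: an electron confined to a one-dimensional box of width L has energy levels E_n = n²h²/(8mL²), so the spacing between levels grows as the box shrinks. The helper names `box_level_ev` and `gap_ev` are hypothetical, and the free-electron mass is only a placeholder; a real calculation would use the material’s effective mass.

```python
# Toy model: a 1D infinite square well ("particle in a box").
# Assumption: this illustrates quantum confinement in general; it is NOT
# Empa's model of colloidal quantum dots.

H = 6.62607015e-34        # Planck constant, J*s
M_E = 9.1093837015e-31    # free-electron mass, kg (real dots use an effective mass)
EV = 1.602176634e-19      # joules per electronvolt


def box_level_ev(n: int, width_m: float, mass_kg: float = M_E) -> float:
    """Energy of level n in a 1D infinite well of the given width, in eV."""
    return n**2 * H**2 / (8 * mass_kg * width_m**2) / EV


def gap_ev(width_m: float, mass_kg: float = M_E) -> float:
    """Spacing between the two lowest levels (n=1 to n=2), in eV."""
    return box_level_ev(2, width_m, mass_kg) - box_level_ev(1, width_m, mass_kg)


# Same "material" (same particle mass), different sizes: the spacing changes.
for width_nm in (2, 5, 10, 20):
    print(f"{width_nm:>3} nm box: level spacing = {gap_ev(width_nm * 1e-9):.4f} eV")
```

In this toy model, halving the width quadruples the level spacing. That 1/L² scaling is the reason particle size, not just chemistry, can set which photon energies a material responds to.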

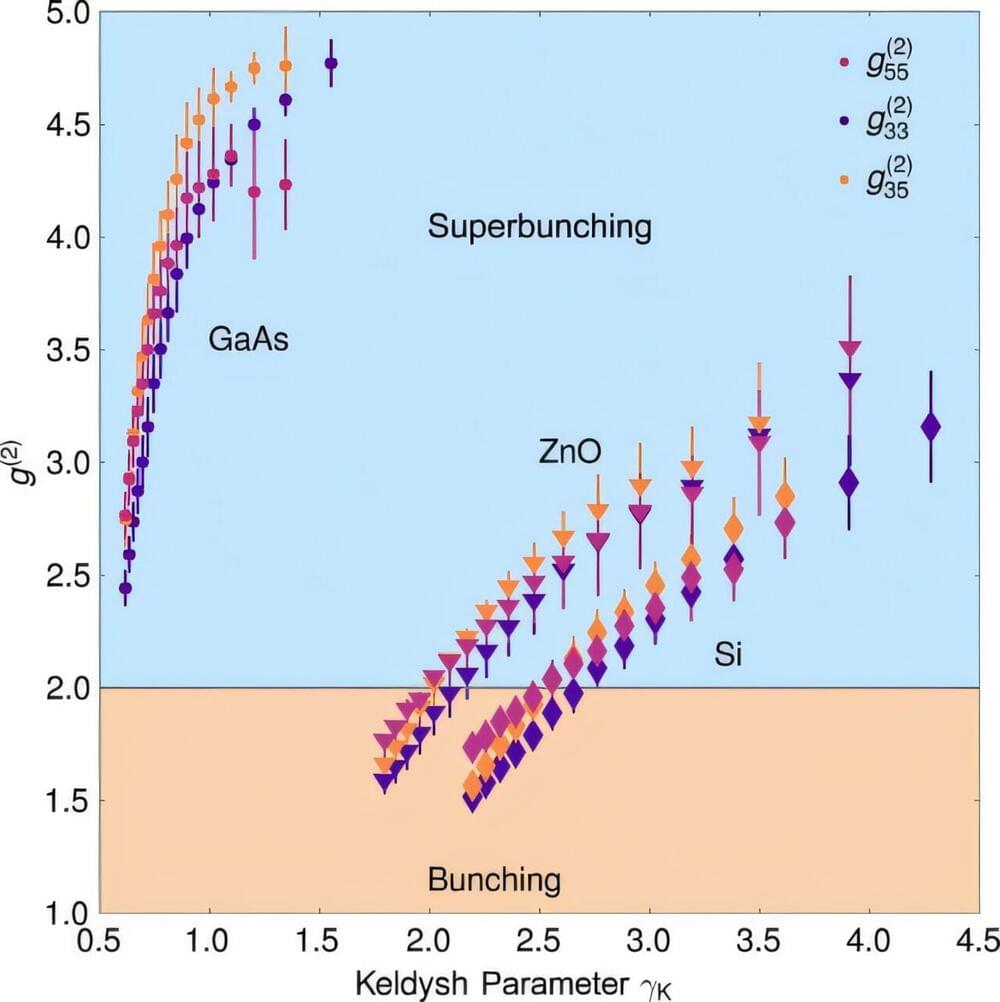

High harmonic generation (HHG) is a highly non-linear phenomenon where a system (for example, an atom) absorbs many photons of a laser and emits photons of much higher energy, whose frequency is a harmonic (that is, a multiple) of the incoming laser’s frequency. Historically, the theoretical description of this process was addressed from a semi-classical perspective, which treated matter (the electrons of the atoms) quantum-mechanically, but the incoming light classically. According to this approach, the emitted photons should also behave classically.
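The harmonic relation reduces to simple arithmetic: the q-th harmonic has q times the driving frequency, so its photon energy is q times the driving photon energy and its wavelength is λ/q. This is a sketch only. The 800 nm driver wavelength and the function names are illustrative assumptions, not parameters of the experiments discussed in this article.

```python
# Photon energy and wavelength of the q-th harmonic: E_q = q * h * c / lambda.
# Assumption: the 800 nm driver is only an example wavelength.
HC_EV_NM = 1239.84198  # h*c in eV*nm


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon of the given wavelength, in eV."""
    return HC_EV_NM / wavelength_nm


def harmonic(q: int, wavelength_nm: float) -> tuple[float, float]:
    """Return (photon energy in eV, wavelength in nm) of harmonic order q."""
    if q < 1:
        raise ValueError("harmonic order must be a positive integer")
    return q * photon_energy_ev(wavelength_nm), wavelength_nm / q


driver_nm = 800.0
for q in (1, 3, 5, 11, 21):
    energy, wl = harmonic(q, driver_nm)
    print(f"H{q:<2}: {energy:6.2f} eV at {wl:6.1f} nm")
```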

Despite this evident theoretical mismatch, the description was sufficient to explain most experiments, and there was no apparent need to change the framework. Only in the last few years has the scientific community begun to explore whether the emitted light could actually exhibit quantum behavior that the semi-classical theory might have overlooked. Several theoretical groups, including the Quantum Optics Theory group at ICFO, have already shown that, under a full quantum description, the HHG process emits light with quantum features.

However, experimental validation of these predictions remained elusive until recently, when a team led by the Laboratoire d’Optique Appliquée (CNRS), in collaboration with Jens Biegert, ICREA Professor at ICFO, and several other institutions (Institut für Quantenoptik at Leibniz Universität Hannover, Fraunhofer Institute for Applied Optics and Precision Engineering IOF, Friedrich-Schiller-University Jena), demonstrated the quantum optical properties of high-harmonic generation in semiconductors. The results, appearing in PRX Quantum, agree with the earlier theoretical predictions about HHG.

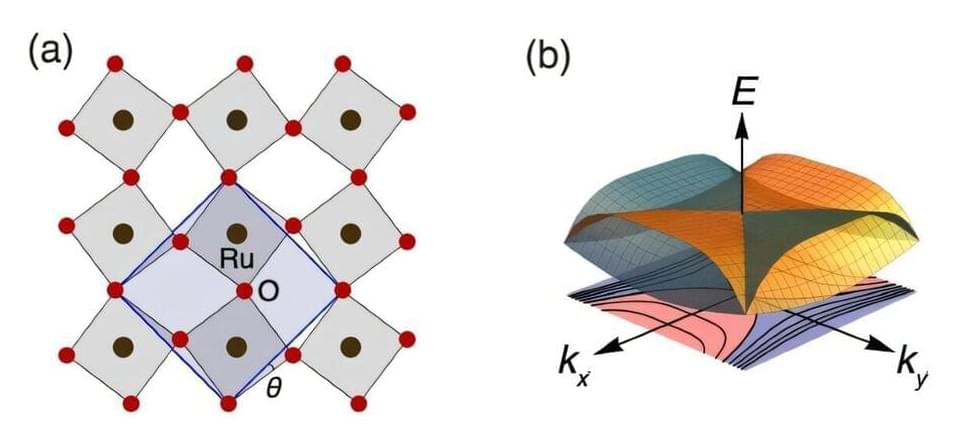

Physicists at Loughborough University have made an exciting breakthrough in understanding how to fine-tune the behavior of electrons in quantum materials poised to drive the next generation of advanced technologies.

Quantum materials, like bilayer graphene and strontium ruthenates, exhibit remarkable properties such as superconductivity and magnetism, which could revolutionize areas like computing and energy storage.

However, these materials are not yet widely used in real-world applications due to the challenges in understanding the complex behavior of their electrons—the particles that carry electrical charge.

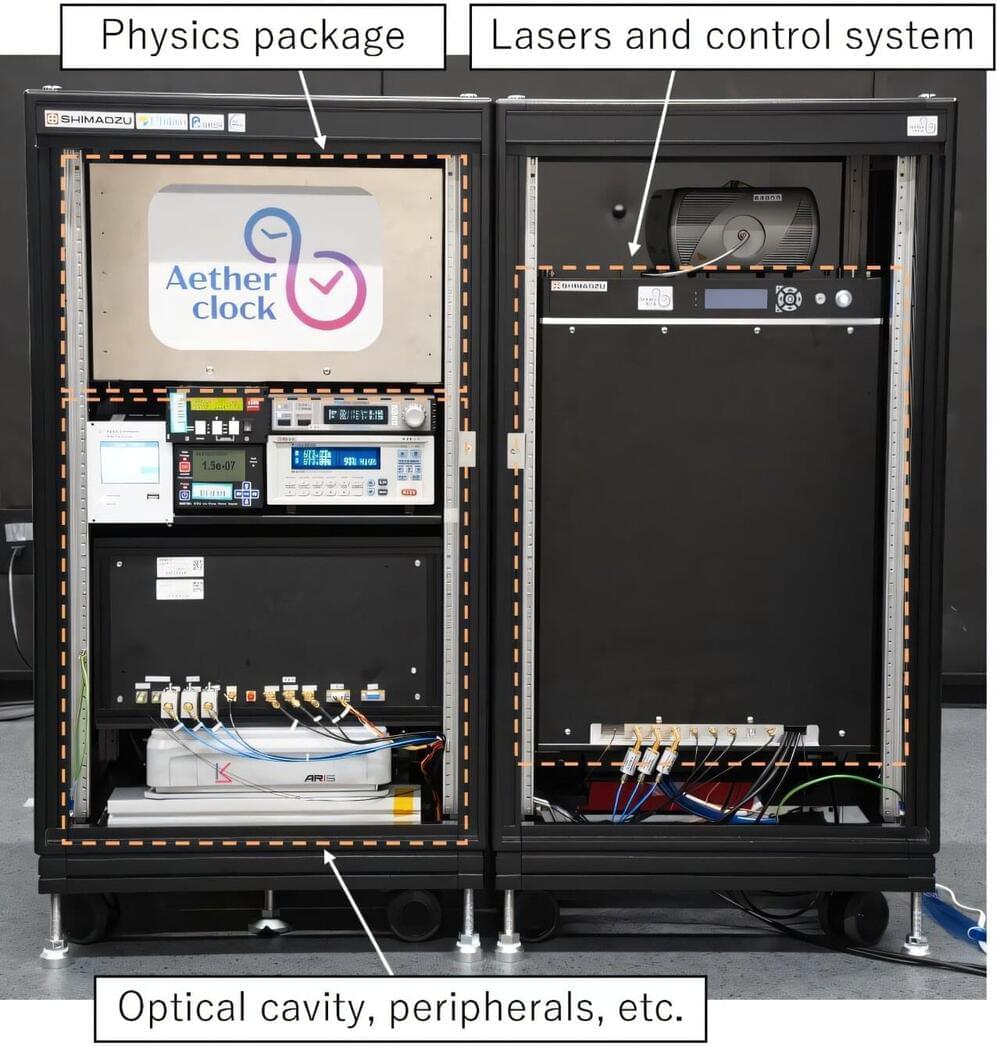

An optical lattice clock is a type of atomic clock that can be 100 times more accurate than cesium atomic clocks, the current standard for defining the second. Its precision is equivalent to an error of approximately one second over 10 billion years. Owing to this exceptional accuracy, the optical lattice clock is considered a leading candidate for the next-generation “definition of the second.”
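The “one second over 10 billion years” figure translates into a fractional error, which is how clock accuracy is usually quoted. The sketch below assumes a Julian year of 365.25 days and takes the factor of 100 from the text at face value. The helper name `fractional_error` is hypothetical.

```python
# Convert "about 1 s of error over 10 billion years" into a fractional error.
# Assumptions: Julian year (365.25 days); the 100x factor is taken from the text.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def fractional_error(drift_s: float, span_years: float) -> float:
    """Accumulated timing error divided by the elapsed time (dimensionless)."""
    return drift_s / (span_years * SECONDS_PER_YEAR)


optical = fractional_error(1.0, 10e9)
cesium = optical * 100  # cesium clocks are ~100x less accurate, per the text
print(f"optical lattice clock: {optical:.1e}")
print(f"implied cesium clock:  {cesium:.1e}")
print(f"cesium: 1 s of drift every {1 / (cesium * SECONDS_PER_YEAR):.1e} years")
```

On these assumptions, the optical clock’s fractional error comes out at roughly 3 × 10⁻¹⁸, and the implied cesium figure corresponds to about one second of drift every 100 million years.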

Professor Hidetoshi Katori from the Graduate School of Engineering at The University of Tokyo has achieved a milestone by developing the world’s first compact, robust, ultrahigh-precision optical lattice clock, with a device volume of 250 L.

As part of this development, the physics package for spectroscopic measurement of atomic clock transitions was miniaturized, along with the laser and control system used for trapping and spectroscopy of atoms. This reduced the device volume from the conventional 920 L to 250 L, approximately one-quarter of the previous size.

Particles called neutrons are typically very content inside atoms. They stick around for billions of years and longer inside some of the atoms that make up matter in our universe. But when neutrons are free and floating alone outside of an atom, they start to decay into protons and other particles. Their lifetime is short, lasting only about 15 minutes.

Physicists have spent decades trying to measure the precise lifetime of a neutron using two techniques, one involving bottles and the other beams. But the results from the two methods have not matched: they differ by about 9 seconds, which is significant for a particle that only lives about 15 minutes.

Now, in a new study published in the journal Physical Review Letters, a team of scientists has made the most precise measurement yet of a neutron’s lifetime using the bottle technique. The experiment, known as UCNtau (for Ultra Cold Neutrons tau, where tau refers to the neutron lifetime), has revealed that the neutron lives 14.629 minutes, with an uncertainty of 0.005 minutes. This is twice as precise as previous measurements made with either method. While the results do not solve the mystery of why the bottle and beam methods disagree, they bring scientists closer to an answer.
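The numbers are easier to compare in seconds. This sketch converts the UCNtau value from the text and compares the roughly 9-second bottle/beam gap with the new uncertainty. That comparison only gives a sense of scale and is not a proper significance test, since it ignores the beam measurements’ own uncertainty. The sketch also applies the standard exponential decay law N(t) = N₀·exp(−t/τ). Variable and function names are illustrative.

```python
import math

tau_min = 14.629          # UCNtau lifetime from the text, minutes
sigma_min = 0.005         # quoted uncertainty, minutes
tau_s = tau_min * 60      # lifetime in seconds
sigma_s = sigma_min * 60  # uncertainty in seconds
gap_s = 9.0               # approximate bottle-vs-beam disagreement from the text


def surviving_fraction(t_s: float, lifetime_s: float) -> float:
    """Fraction of free neutrons not yet decayed after t seconds: exp(-t / tau)."""
    return math.exp(-t_s / lifetime_s)


print(f"lifetime: {tau_s:.2f} +/- {sigma_s:.2f} s")
print(f"9 s gap / new uncertainty: {gap_s / sigma_s:.0f}x")
print(f"still undecayed after 15 min: {surviving_fraction(15 * 60, tau_s):.3f}")
print(f"half-life (tau * ln 2): {tau_s * math.log(2) / 60:.2f} min")
```

Because τ is a mean lifetime, only about 36% of free neutrons remain after 15 minutes, and the half-life works out to roughly 10 minutes.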

Using a groundbreaking new technique, researchers have produced the first detailed image ever taken of a photon, a single particle of light.