Quantum cognition is a new research program that uses mathematical principles from quantum theory as a framework to explain human cognition, including judgment and decision making, concepts, reasoning, memory, and perception. This research is not concerned with whether the brain is a quantum computer. Instead, it uses quantum theory as a fresh conceptual framework and a coherent set of formal tools for explaining puzzling empirical findings in psychology. In this introduction, we focus on two quantum principles as examples to show why quantum cognition is an appealing new theoretical direction for psychology: complementarity, which suggests that some psychological measures have to be made sequentially and that the context generated by the first measure can influence responses to the next one, producing measurement order effects, and superposition, which suggests that some psychological states cannot be defined with respect to definite values but, instead, that all possible values within the superposition have some potential for being expressed. We present evidence showing how these two principles work together to provide a coherent explanation for many divergent and puzzling phenomena in psychology. (PsycInfo Database Record © 2020 APA, all rights reserved)
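A measurement order effect of the kind described above can be illustrated with a toy calculation. The sketch below is not taken from the paper: the two-dimensional state, the angles, and the helper names (`projector`, `prob_yes_then_yes`) are assumptions chosen only for illustration. Two yes/no questions are modeled as projectors that do not commute, and the belief state is a superposition with respect to both.

```python
import math

def projector(theta):
    """Rank-1 projector onto the unit vector (cos theta, sin theta)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * c, c * s], [c * s, s * s]]

def apply(P, v):
    """Multiply the 2x2 matrix P by the 2-vector v."""
    return [P[0][0] * v[0] + P[0][1] * v[1],
            P[1][0] * v[0] + P[1][1] * v[1]]

def norm_sq(v):
    """Squared length of a 2-vector."""
    return v[0] ** 2 + v[1] ** 2

def prob_yes_then_yes(first, second, state):
    """Probability of answering yes to `first` and then yes to `second`."""
    return norm_sq(apply(second, apply(first, state)))

# Hypothetical belief state: neither a definite "yes" nor a definite "no"
# to either question (a superposition in both bases).
phi = math.radians(10)
state = [math.cos(phi), math.sin(phi)]

P_a = projector(0.0)               # "yes" subspace for question A (assumed)
P_b = projector(math.radians(45))  # "yes" subspace for question B (assumed)

p_ab = prob_yes_then_yes(P_a, P_b, state)  # ask A first, then B
p_ba = prob_yes_then_yes(P_b, P_a, state)  # ask B first, then A
print(f"A then B: {p_ab:.4f}   B then A: {p_ba:.4f}")
```

Because the two projectors do not commute, the two probabilities differ. A classical model in which both answers have fixed joint probabilities would give the same value for either order.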


A small team of AI researchers at Microsoft reports that the company’s Orca-Math small language model outperforms other, larger models on standardized math tests. The group has published a paper on the arXiv preprint server describing their testing of Orca-Math on the Grade School Math 8K (GSM8K) benchmark and how it fared compared to well-known LLMs.

Many popular LLMs such as ChatGPT are known for their impressive conversational skills—less well known is that most of them can also solve math word problems. AI researchers have tested their abilities at such tasks by pitting them against the GSM8K, a dataset of 8,500 grade-school math word problems that require multistep reasoning to solve, along with their correct answers.

In this new study, the research team at Microsoft tested Orca-Math, an AI application developed by another team at Microsoft specifically designed to tackle math word problems, and compared the results with larger AI models.

Year 2010 😗😁

The world has waited with bated breath for three decades, and now finally a group of academics, engineers, and math geeks has discovered the number that explains life, the universe, and everything. That number is 20, and it’s the maximum number of moves it takes to solve a Rubik’s Cube.

Known as God’s Number, the magic number required about 35 CPU-years and a good deal of man-hours to find. Why? Because there are 43,252,003,274,489,856,000 possible positions of the cube, and the computer algorithm that finally cracked God’s Algorithm had to solve them all. (The terms God’s Number/Algorithm are derived from the fact that if God were solving a Cube, he/she/it would do it in the most efficient way possible. The Creator did not endorse this study, and could not be reached for comment.)
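The size of the search space follows from a standard counting argument that the article does not spell out. The sketch below assumes the usual reasoning: 8 corners can be permuted and each twisted 3 ways, 12 edges can be permuted and each flipped 2 ways, but the last corner twist and the last edge flip are forced, and only half of the combined permutations are reachable. The variable names are ours.

```python
from math import factorial

# Corners: 8! permutations, 3 orientations each, last orientation is forced.
corner_arrangements = factorial(8) * 3**7

# Edges: 12! permutations, 2 orientations each, last orientation is forced.
edge_arrangements = factorial(12) * 2**11

# Corner and edge permutations must have matching parity, which halves the total.
positions = corner_arrangements * edge_arrangements // 2

print(f"{positions:,}")  # prints 43,252,003,274,489,856,000
```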

A full breakdown of the history of God’s Number as well as a full breakdown of the math is available here, but summarily the team broke the possible positions down into sets, then drastically cut the number of possible positions they had to solve for through symmetry (if you scramble a Cube randomly and then turn it upside down, you haven’t changed the solution).

Nice figures in this newly published survey on Scalable Optimal Transport with 200+ references.


Optimal Transport (OT) is a mathematical framework that first emerged in the eighteenth century and has led to a plethora of methods for answering many theoretical and applied questions. The last decade has been a witness to the remarkable contributions of this classical optimization problem to machine learning. This paper is about where and how optimal transport is used in machine learning with a focus on the question of scalable optimal transport. We provide a comprehensive survey of optimal transport while ensuring an accessible presentation as permitted by the nature of the topic and the context. First, we explain the optimal transport background and introduce different flavors (i.e. mathematical formulations), properties, and notable applications.
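For readers who have not met the problem before, here is a minimal sketch of one common scalable approach, entropy-regularized OT solved with Sinkhorn iterations. It is an illustration only, not the survey's own code: the function name `sinkhorn`, the toy histograms, the squared-distance cost, and the values of `reg` and `n_iters` are all assumptions.

```python
import math

def sinkhorn(a, b, cost, reg=1.0, n_iters=1000):
    """Approximate transport plan between histograms a and b.

    Alternately rescales the rows and columns of the kernel
    K = exp(-cost / reg) until the plan's marginals match a and b.
    """
    n, m = len(a), len(b)
    K = [[math.exp(-cost[i][j] / reg) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Row scaling: make the row sums of the plan match a.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        # Column scaling: make the column sums of the plan match b.
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]

# Toy problem: move mass between two histograms on the points 0, 1, 2.
points = [0.0, 1.0, 2.0]
cost = [[(x - y) ** 2 for y in points] for x in points]
a = [0.5, 0.3, 0.2]  # source histogram (sums to 1)
b = [0.2, 0.3, 0.5]  # target histogram (sums to 1)

plan = sinkhorn(a, b, cost)
row_sums = [sum(row) for row in plan]
col_sums = [sum(plan[i][j] for i in range(3)) for j in range(3)]
total_cost = sum(plan[i][j] * cost[i][j] for i in range(3) for j in range(3))
print(row_sums, col_sums, total_cost)
```

Each iteration costs only a pair of matrix-vector products, which is why this family of methods scales to large problems. A smaller `reg` gives a plan closer to unregularized OT but converges more slowly.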

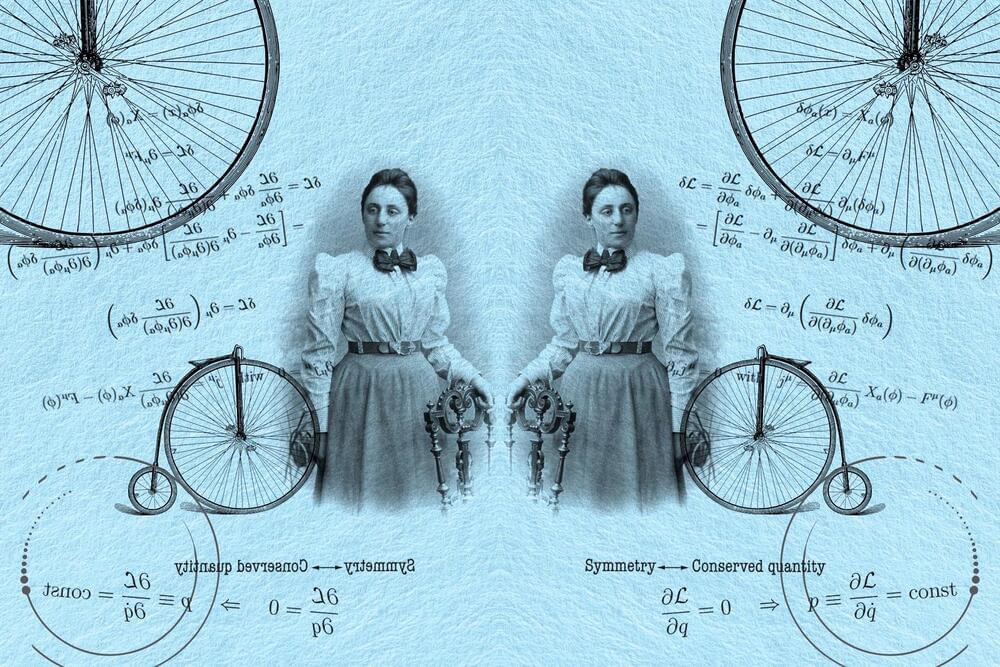

A century ago, Emmy Noether published a theorem that would change mathematics and physics. Here’s an all-ages guided tour through this groundbreaking idea.

Research unveils a mathematical model for ice nucleation, showing how surface angles affect water’s freezing point, with applications in snowmaking and cloud seeding.

From abstract-looking cloud formations to roars of snow machines on ski slopes, the transformation of liquid water into solid ice touches many facets of life. Water’s freezing point is generally accepted to be 32 degrees Fahrenheit. But that is due to ice nucleation — impurities in everyday water raise its freezing point to this temperature. Now, researchers unveil a theoretical model that shows how specific structural details on surfaces can influence water’s freezing point.

Research Findings and Their Implications

“They remove some of the magic,” said Dimitris Papailiopoulos, a machine learning researcher at the University of Wisconsin, Madison. “That’s a good thing.”

Training Transformers

Large language models are built around mathematical structures called artificial neural networks. The many “neurons” inside these networks perform simple mathematical operations on long strings of numbers representing individual words, transmuting each word that passes through the network into another. The details of this mathematical alchemy depend on another set of numbers called the network’s parameters, which quantify the strength of the connections between neurons.
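To make the idea of neurons and parameters concrete, here is a minimal sketch of a single fully connected layer. It is not a transformer and not how any particular language model is implemented: the function name `dense_layer`, the three-number "word vector," the weights, and the choice of `tanh` are all assumptions for illustration.

```python
import math

def dense_layer(inputs, weights, biases):
    """Apply one layer of neurons to a vector of numbers.

    Each neuron computes a weighted sum of its inputs (the weights are the
    connection strengths, i.e. the parameters) and passes the result
    through a simple nonlinearity.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        pre_activation = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(math.tanh(pre_activation))
    return outputs

# Hypothetical numbers standing in for one word.
word_vector = [0.2, -1.0, 0.5]

# Hypothetical parameters: two neurons, each with three incoming connections.
weights = [[0.1, 0.4, -0.3],
           [-0.7, 0.2, 0.9]]
biases = [0.0, 0.1]

transformed = dense_layer(word_vector, weights, biases)
print(transformed)
```

Training adjusts the entries of `weights` and `biases`; the arithmetic each neuron performs stays the same.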

Scientists trained an AI system to think before speaking with a technique called Quiet-STaR. The inner monologue improved common-sense reasoning and doubled math performance.

Fermat’s Last Theorem puzzled mathematicians for centuries until Andrew Wiles announced a proof in 1993, which was corrected and published in 1995. Now, researchers want to create a version of the proof that can be formally checked by a computer for any errors in logic.

By Alex Wilkins

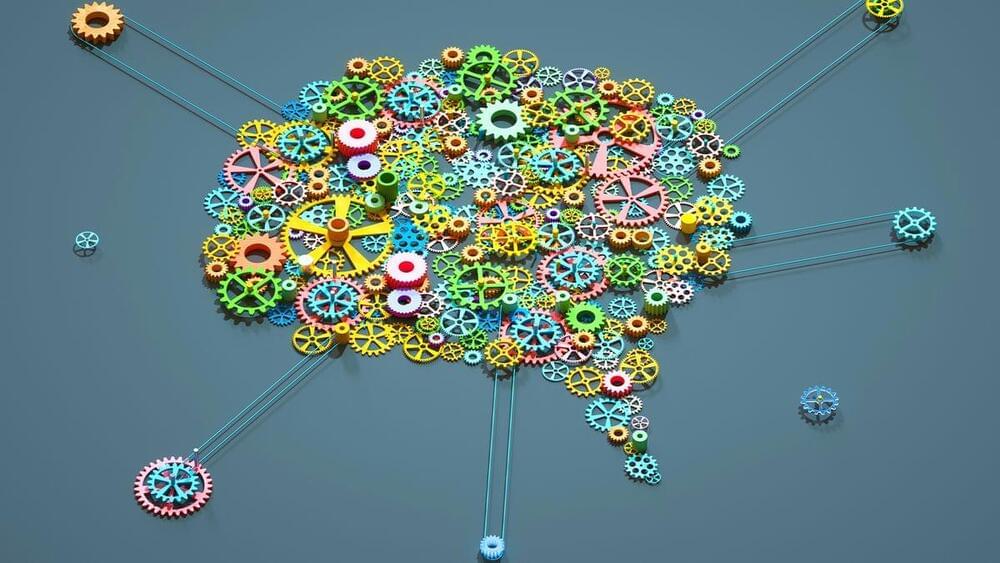

This paper introduces a novel theoretical framework for understanding consciousness, proposing a paradigm shift from traditional biological-centric views to a broader, universal perspective grounded in thermodynamics and systems theory. We posit that consciousness is not an exclusive attribute of biological entities but a fundamental feature of all systems exhibiting a particular form of intelligence. This intelligence is defined as the capacity of a system to efficiently utilize energy to reduce internal entropy, thereby fostering increased order and complexity. Supported by a robust mathematical model, the theory suggests that subjective experience, or what is often referred to as qualia, emerges from the intricate interplay of energy, entropy, and information within a system. This redefinition of consciousness and intelligence challenges existing paradigms and extends the potential for understanding and developing Artificial General Intelligence (AGI). The implications of this theory are vast, bridging gaps between cognitive science, artificial intelligence, philosophy, and physics, and providing a new lens through which to view the nature of consciousness itself.

Consciousness, traditionally viewed through the lens of biology and neurology, has long been a subject shrouded in mystery and debate. Philosophers, scientists, and thinkers have pondered over what consciousness is, how it arises, and why it appears to be a unique trait of certain biological organisms. The “hard problem” of consciousness, a term coined by philosopher David Chalmers, encapsulates the difficulty in explaining why and how physical processes in the brain give rise to subjective experiences.

Current research in cognitive science, neuroscience, and artificial intelligence offers various theories of consciousness, ranging from neural correlates of consciousness (NCCs) to quantum theories. However, these theories often face limitations in fully explaining the emergence and universality of consciousness.