Aug 23, 2024

Unconventional interface superconductor could benefit quantum computing

Posted by Shailesh Prasad in categories: computing, quantum physics

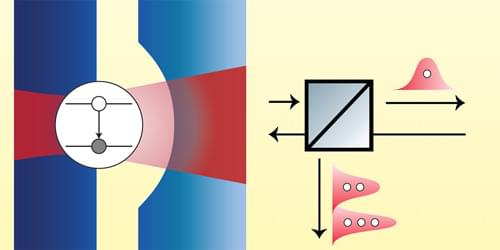

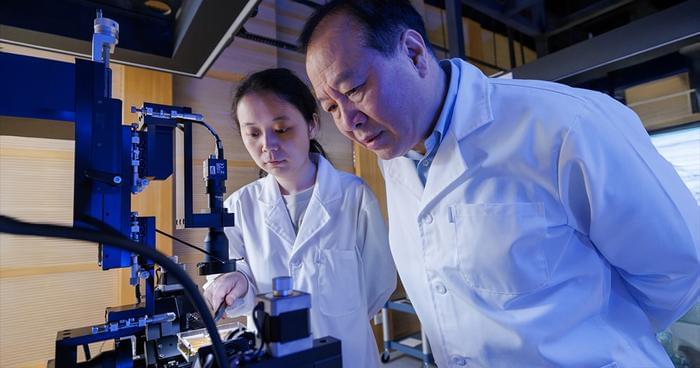

A multi-institutional team of scientists in the United States, led by physicist Peng Wei at the University of California, Riverside, has developed a new superconducting material that could potentially be used in quantum computing and may be a candidate “topological superconductor.”