Sep 2, 2022

Revolutionizing image generation through AI: Turning text into images

Posted by Jose Ruben Rodriguez Fuentes in categories: information science, robotics/AI, supercomputing

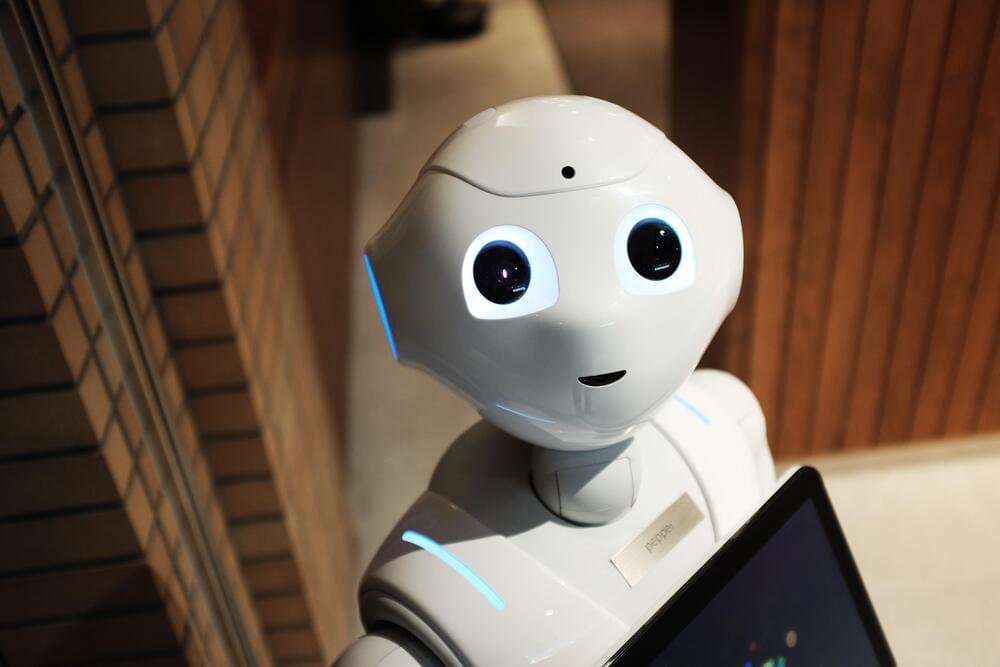

Creating images from text in seconds—and doing so with a conventional graphics card and without supercomputers? As fanciful as it may sound, this is made possible by the new Stable Diffusion AI model. The underlying algorithm was developed by the Machine Vision & Learning Group led by Prof. Björn Ommer (LMU Munich).

“Even for laypeople not blessed with artistic talent and without special computing know-how and computer hardware, the new model is an effective tool that enables computers to generate images on command. As such, the model removes a barrier to ordinary people expressing their creativity,” says Ommer. But there are benefits for seasoned artists as well, who can use Stable Diffusion to quickly convert new ideas into a variety of graphic drafts. The researchers are convinced that such AI-based tools will be able to expand the possibilities of creative image generation with paintbrush and Photoshop as fundamentally as computer-based word processing revolutionized writing with pens and typewriters.

In their project, the LMU scientists had the support of the start-up Stability.Ai, on whose servers the AI model was trained. “This additional computing power and the extra training examples turned our AI model into one of the most powerful image synthesis algorithms,” says the computer scientist.