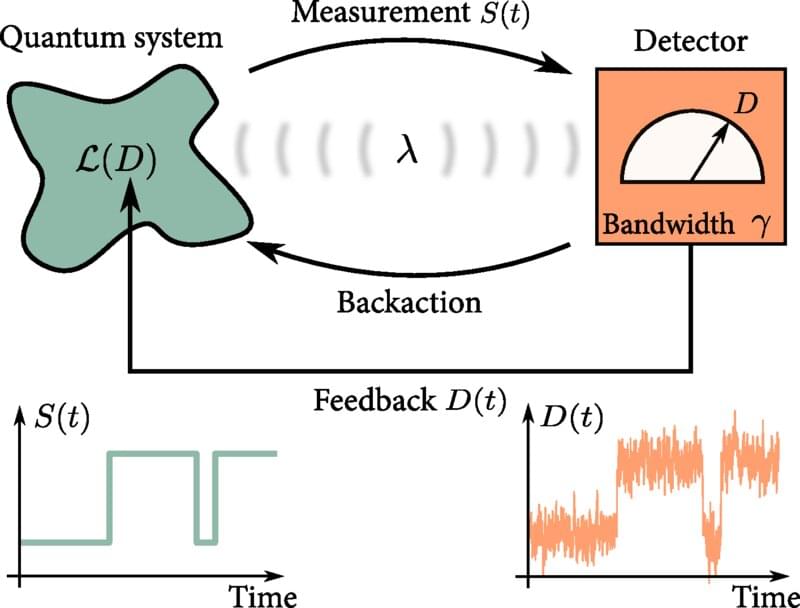

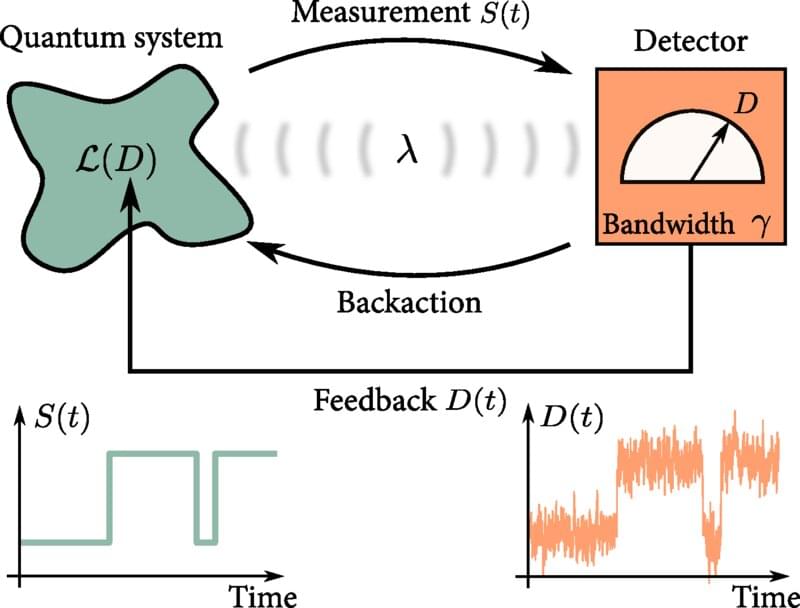

As modern technology shrinks to the nanoscale, weird quantum effects (such as quantum tunneling, superposition, and entanglement) become prominent. This opens the door to a new era of quantum technologies that exploit these effects. Controlling such technologies will require feedback, something many everyday devices already use routinely; an important example is the pacemaker, which must monitor the user’s heartbeat and apply electrical signals to control it only when needed. But physicists do not yet have an equivalent understanding of feedback control at the quantum level. Now, physicists have developed a “master equation” that will help engineers understand feedback at the quantum scale. Their results are published in the journal Physical Review Letters.

“It is vital to investigate how feedback control can be used in quantum technologies in order to develop efficient and fast methods for controlling quantum systems, so that they can be steered in real time and with high precision,” says co-author Björn Annby-Andersson, a quantum physicist at Lund University, in Sweden.

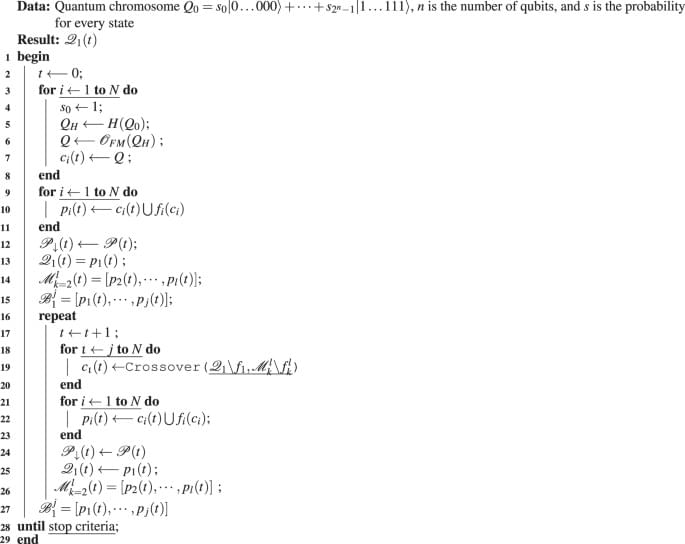

An example of a crucial feedback-control process in quantum computing is quantum error correction. A quantum computer encodes information on physical qubits, which could be photons or atoms, for instance. But the quantum properties of the qubits are fragile, so the encoded information is likely to be lost if the qubits are disturbed by vibrations or fluctuating electromagnetic fields. Physicists therefore need to be able to detect and correct such errors, for instance by using feedback control. This error correction can be implemented by measuring the state of the qubits and, if the measurement reveals a deviation from what is expected, applying feedback to correct it.
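To make the measure-then-correct loop concrete, here is a minimal sketch in Python. It is a classical toy model of a three-bit repetition code, not the method from the paper: ordinary bits stand in for qubits, and the function names (`encode`, `measure_syndrome`, `feedback_correct`) are hypothetical, chosen only for illustration. A real quantum device would measure parity checks on the qubits without reading out the encoded information directly, but the feedback logic has the same shape.

```python
import random

def encode(bit):
    """Encode one logical bit into three physical bits (repetition code)."""
    return [bit, bit, bit]

def apply_noise(bits, flip_prob, rng):
    """Flip each bit independently with probability flip_prob (a toy disturbance)."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]

def measure_syndrome(bits):
    """Compare neighbouring bits. The two parities locate a single flip
    without revealing the logical value itself."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

# Feedback rule (an assumption of this toy model): which bit to flip
# for each measurement outcome. (0, 0) means nothing looks wrong.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def feedback_correct(bits):
    """Measure the syndrome and, if a deviation is detected, flip the suspect bit."""
    target = CORRECTION[measure_syndrome(bits)]
    corrected = list(bits)
    if target is not None:
        corrected[target] ^= 1
    return corrected

def decode(bits):
    """Recover the logical bit by majority vote."""
    return int(sum(bits) >= 2)

if __name__ == "__main__":
    rng = random.Random(0)
    noisy = apply_noise(encode(1), flip_prob=0.1, rng=rng)
    print(noisy, "->", feedback_correct(noisy))
```

For example, `feedback_correct([0, 1, 0])` returns `[0, 0, 0]`: the syndrome `(1, 1)` points at the middle bit, and the feedback step flips it back. The scheme only repairs a single flipped bit; if two bits flip at once, the feedback restores the wrong value, which is why real error-correcting codes use more qubits.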