The bulk of the computing in state-of-the-art neural networks consists of linear operations, such as matrix-vector multiplications and convolutions. Linear operations can also play an important role in cryptography. While dedicated processors such as GPUs and TPUs are available for performing highly parallel linear operations, these devices are power-hungry, and the low bandwidth of electronics still limits their operation speed. Optics is better suited for such operations because of its inherent parallelism, large bandwidth, and high computation speed.

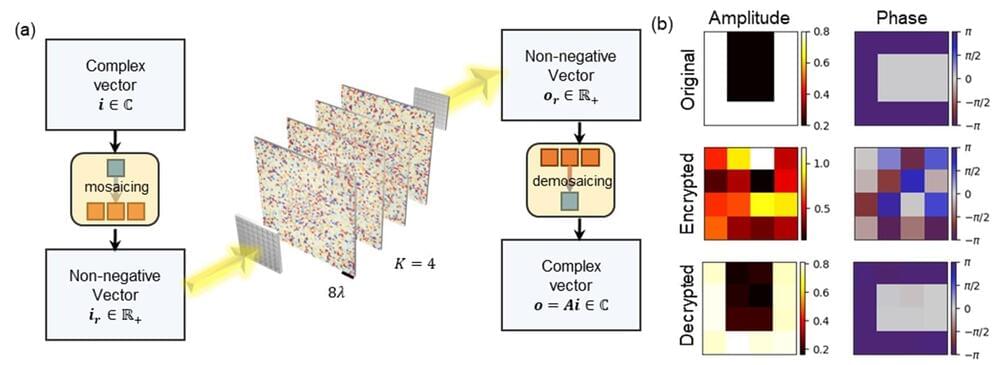

Built from a set of spatially engineered thin surfaces, diffractive deep neural networks (D2NN), also known as diffractive networks, form a recently emerging optical computing architecture capable of performing computational tasks passively at the speed of light propagation through an ultra-thin volume.

These task-specific all-optical computers are designed digitally through learning of the spatial features of their constituent diffractive surfaces. Following this one-time design process, the optimized surfaces are fabricated and assembled to form the physical hardware of the diffractive optical network.
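To make the idea concrete, here is a minimal sketch of how light passing through a stack of diffractive surfaces can be modeled as a linear operation on the input field. It is a toy one-dimensional model and not the authors' design code. It assumes phase-only surfaces, a simplified Huygens-style propagation kernel, arbitrary units, and placeholder phase values (`PHASES`) that stand in for what the learning step would optimize. All function and variable names are hypothetical.

```python
import cmath
import math

def propagation_matrix(n, pitch, distance, wavelength):
    """Toy 1D free-space propagation between two parallel planes.

    Each input pixel is treated as a secondary spherical-wave source
    (Huygens' principle) whose amplitude falls off as 1/r. This is a
    simplified kernel chosen for illustration only.
    """
    k = 2 * math.pi / wavelength
    matrix = []
    for i in range(n):            # pixel index on the output plane
        row = []
        for j in range(n):        # pixel index on the input plane
            r = math.hypot((i - j) * pitch, distance)
            row.append(cmath.exp(1j * k * r) / r)
        matrix.append(row)
    return matrix

def matvec(matrix, vector):
    """Complex matrix-vector product."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def forward(field, phases, H):
    """Propagate to each surface, apply its phase mask, then reach the detector."""
    for layer in phases:
        field = matvec(H, field)                               # free-space propagation
        field = [cmath.exp(1j * p) * f for p, f in zip(layer, field)]  # phase-only mask
    return matvec(H, field)                                    # last hop to the detector plane

# Assumed, arbitrary parameters (units of wavelength); not taken from the article.
N = 4
H = propagation_matrix(N, pitch=0.5, distance=3.0, wavelength=1.0)

# Placeholder phase values for two surfaces; a real design would learn these.
PHASES = [
    [0.0, 1.0, 2.0, 3.0],
    [0.5, 0.0, 1.5, 2.5],
]

input_field = [1 + 0j, 0j, 0.5j, 0j]
output_field = forward(input_field, PHASES, H)
intensity = [abs(f) ** 2 for f in output_field]   # what a detector would measure
```

Because every step is either a fixed matrix multiplication or an element-wise multiplication by a constant, the whole stack acts on the complex input field as a single linear transformation. In this picture, the one-time design process amounts to choosing the phase values so that the transformation performs the desired task; only the final intensity measurement at the detector is nonlinear.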
