Research in the field of machine learning and AI, now a key technology in practically every industry and company, is far too voluminous for anyone to read it all. This column, Perceptron, aims to collect some of the most relevant recent discoveries and papers — particularly in, but not limited to, artificial intelligence — and explain why they matter.

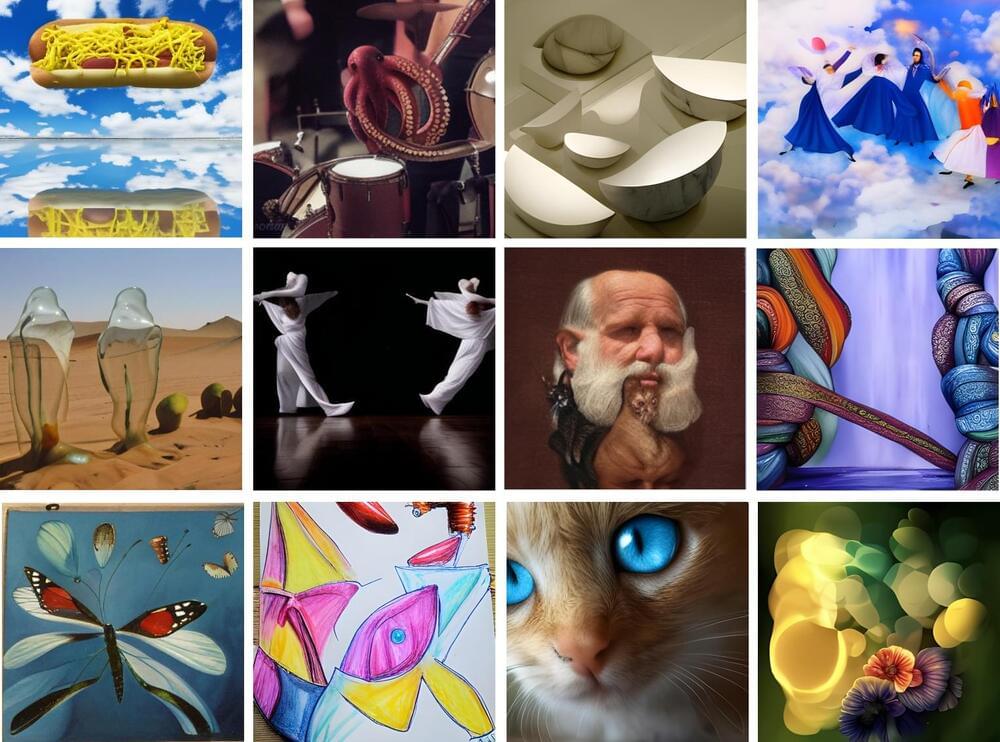

In this batch of recent research, Meta open-sourced a language system that it claims is the first capable of translating across 200 different languages with “state-of-the-art” results. Not to be outdone, Google detailed a machine learning model, Minerva, that can solve quantitative reasoning problems, including mathematical and scientific questions. Microsoft, meanwhile, released a language model, Godel, for generating “realistic” conversations along the lines of Google’s widely publicized LaMDA. And then we have some new text-to-image generators with a twist.

Meta’s new model, NLLB-200, is part of the company’s No Language Left Behind initiative to develop machine-powered translation capabilities for most of the world’s languages. Trained to understand languages such as Kamba (a Bantu language spoken in Kenya) and Lao (the official language of Laos), as well as 55 African languages not supported well or at all by previous translation systems, NLLB-200 will be used to translate content on the Facebook News Feed and Instagram, in addition to the Wikimedia Foundation’s Content Translation Tool, Meta recently announced.
