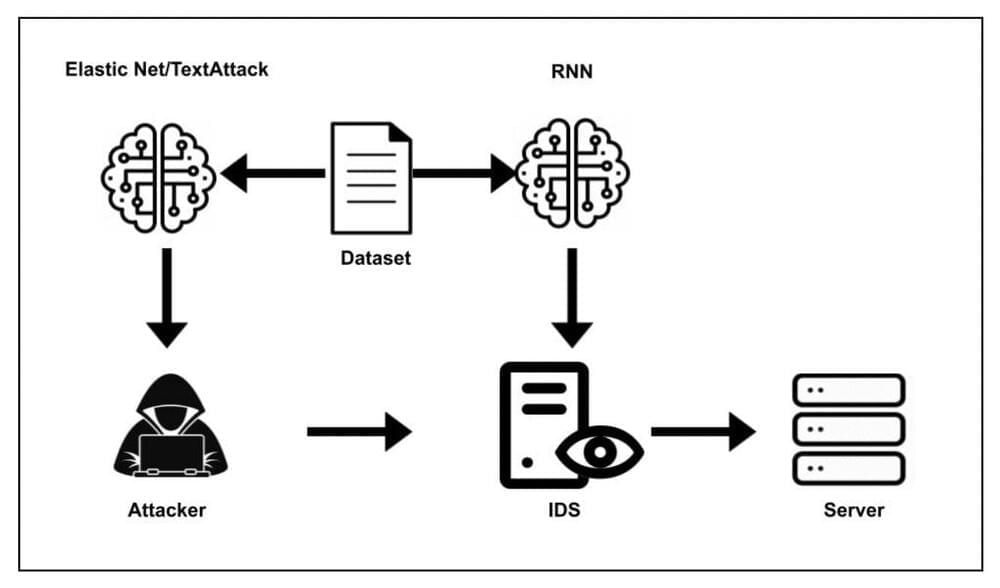

Deep learning techniques have recently proved to be highly promising for detecting cybersecurity attacks and determining their nature. Concurrently, many cybercriminals have been devising new attacks aimed at interfering with the functioning of various deep learning tools, including those for image classification and natural language processing.

Perhaps the most common of these are adversarial attacks, which are designed to “fool” deep learning algorithms by feeding them deliberately modified data that they then classify incorrectly. This can cause malfunctions in many applications, biometric systems, and other technologies that rely on deep learning algorithms.

Several past studies have shown the effectiveness of different adversarial attacks in prompting deep neural networks (DNNs) to make unreliable and false predictions. These attacks include the Carlini & Wagner attack, the DeepFool attack, the fast gradient sign method (FGSM), and the Elastic-Net attack (ENA).
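To make the idea concrete, here is a minimal sketch of FGSM, the simplest of the attacks listed above. It nudges every input feature by a fixed step `epsilon` in the direction that increases the model's loss. The example is an illustration only: it uses a toy logistic-regression classifier rather than a deep neural network, and the weights `w`, bias `b`, input `x`, and step size `epsilon` are hypothetical values chosen for the demonstration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss_gradient_wrt_input(w, b, x, y):
    """Gradient of the cross-entropy loss with respect to the input x.
    For logistic regression this works out to (p - y) * w."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, epsilon):
    """Fast gradient sign method: x_adv = x + epsilon * sign(dL/dx)."""
    grad = loss_gradient_wrt_input(w, b, x, y)
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical model parameters and input (assumptions for illustration).
w = [2.0, -1.0, 0.5]
b = 0.0
x = [0.3, 0.2, 0.1]
y = 1  # true label of x

x_adv = fgsm(w, b, x, y, epsilon=0.3)
p_clean = predict_proba(w, b, x)
p_adv = predict_proba(w, b, x_adv)

print("clean input predicted as class", int(p_clean > 0.5))
print("adversarial input predicted as class", int(p_adv > 0.5))
```

In this toy setting, no feature moves by more than `epsilon`, yet the predicted class changes. Attacks on real DNNs follow the same recipe, with the gradient obtained through backpropagation.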
