Among transhumanists, Nick Bostrom is well known for promoting the idea of ‘existential risks’, potential harms which, were they to come to pass, would annihilate the human condition altogether. Their probability may be relatively small, but the expected magnitude of their effects is so great, Bostrom claims, that it is rational to devote significant resources to safeguarding against them. (Indeed, there are now institutes for the study of existential risks on both sides of the Atlantic.) Moreover, because existential risks are intimately tied to the advancement of science and technology, their probability is likely to grow in the coming years.

Contrary to expectations, Bostrom is much less concerned with ecological suicide from humanity’s excessive carbon emissions than with the emergence of a superior brand of artificial intelligence – a ‘superintelligence’. This creature would be a human artefact, or at least descended from one. However, its self-programming capacity would have run amok in positive feedback, resulting in a maniacal, even self-destructive mission to rearrange the world in the image of its objectives. Such a superintelligence may appear to be quite ruthless in its dealings with humans, but that would only reflect the obstacles that we place, perhaps unwittingly, in the way of the realization of its objectives. Thus, this being would not conform to the science fiction stereotype of robots deliberately revolting against creators who are now seen as their inferiors.

I must confess that I find this conceptualisation of ‘existential risk’ rather un-transhumanist in spirit. Bostrom treats risk as a threat rather than as an opportunity. His risk horizon is precautionary rather than proactionary: he focuses on preventing the worst consequences rather than considering the prospects that are opened up by whatever radical changes might be inflicted by the superintelligence. This may be because in Bostrom’s key thought experiment, the superintelligence turns out to be the ultimate paper-clip collecting machine that ends up subordinating the entire planet to its task, destroying humanity along the way, almost as an afterthought.

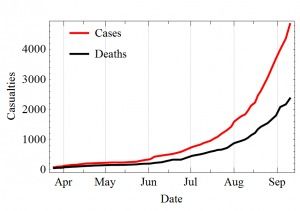

But is this really a good starting point for thinking about existential risk? Much more likely than total human annihilation is that a substantial portion of humanity – but not everyone – is eliminated. (Certainly this captures the worst-case scenarios surrounding climate change.) The Cold War remains the gold standard for this line of thought. In the US, the RAND Corporation’s chief analyst, Herman Kahn – the model for Stanley Kubrick’s Dr Strangelove – routinely, if not casually, tossed off scenarios of how, say, a US-USSR nuclear confrontation would serve to increase the tolerance for human biological diversity, due to the resulting proliferation of genetic mutations. Put in more general terms, a severe social disruption provides a unique opportunity for pursuing ideals that might otherwise be thwarted by a ‘business as usual’ policy orientation.
