Archive for the ‘supercomputing’ category: Page 10

Apr 30, 2024

RIKEN Selects IBM’s Next-Generation Quantum System to be Integrated with the Supercomputer Fugaku

Posted by Dan Breeden in categories: business, economics, information science, internet, quantum physics, supercomputing

ARMONK, N.Y., April 30, 2024 — Today, IBM (NYSE: IBM) has announced an agreement with RIKEN, a Japanese national research laboratory, to deploy IBM’s next-generation quantum computer architecture and best-performing quantum processor at the RIKEN Center for Computational Science in Kobe, Japan. It will be the only instance of a quantum computer co-located with the supercomputer Fugaku.

This agreement was executed as part of RIKEN’s existing project, supported by funding from the New Energy and Industrial Technology Development Organization (NEDO), an organization under Japan’s Ministry of Economy, Trade and Industry (METI)’s “Development of Integrated Utilization Technology for Quantum and Supercomputers” as part of the “Project for Research and Development of Enhanced Infrastructures for Post 5G Information and Communications Systems.” RIKEN has dedicated use of an IBM Quantum System Two architecture to implement its project. Under the project, RIKEN and its co-PI SoftBank Corp., with its collaborators, the University of Tokyo and Osaka University, aim to demonstrate the advantages of such hybrid computational platforms for deployment as services in the future post-5G era, based on the vision of advancing science and business in Japan.

In addition to the project, IBM will work to develop the software stack dedicated to generating and executing integrated quantum-classical workflows in a heterogeneous quantum-HPC hybrid computing environment. These new capabilities will be geared towards delivering improvements in algorithm quality and execution times.

Apr 29, 2024

Meet Nvidia CEO Jensen Huang, the man behind the $2 trillion company powering today’s artificial intelligence

Posted by Shailesh Prasad in categories: robotics/AI, supercomputing

AI that will be able to predict the weather 3,000 times faster than a supercomputer and a program that turns a text prompt into a virtual movie set. These are just two of the applications for AI powered by Nvidia’s technology.

Jensen Huang leads Nvidia – a tech company with a skyrocketing stock and the most advanced technology for artificial intelligence.

Apr 25, 2024

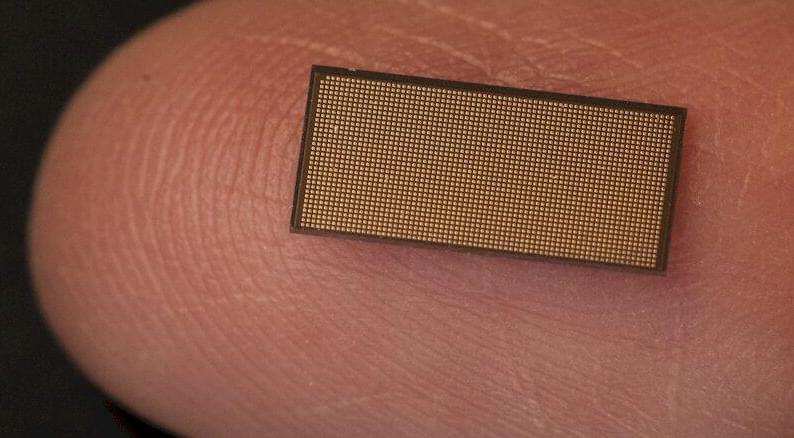

Sandia Pushes The Neuromorphic AI Envelope With Hala Point “Supercomputer”

Posted by Genevieve Klien in categories: robotics/AI, supercomputing

Not many devices in the datacenter have been etched with the Intel 4 process, which is the chip maker’s spin on 7 nanometer extreme ultraviolet immersion lithography. But Intel’s Loihi 2 neuromorphic processor is one of them, and Sandia National Laboratories is firing up a supercomputer with 1,152 of them interlinked to create what Intel is calling the largest neuromorphic system ever assembled.

With Nvidia’s top-end “Blackwell” GPU accelerators now pushing up to 1,200 watts in their peak configurations and requiring liquid cooling, and with other accelerators no doubt following as their sockets inevitably get bigger as Moore’s Law scaling for chip making slows, this is a good time to take a step back and see what can be done with a reasonably scaled neuromorphic system, which not only has circuits that act more like the real neurons in real brains but also burns orders of magnitude less power than the XPUs commonly used in the datacenter for all kinds of compute.

First @NVIDIA DGX H200 in the world, hand-delivered to OpenAI and dedicated by Jensen “to advance AI, computing, and humanity”: pic.twitter.com/rEJu7OTNGT

— Greg Brockman (@gdb) April 24, 2024

Apr 24, 2024

Russia Is Working on a 128-Core Supercomputing Platform: Report

Posted by Shailesh Prasad in category: supercomputing

Apr 24, 2024

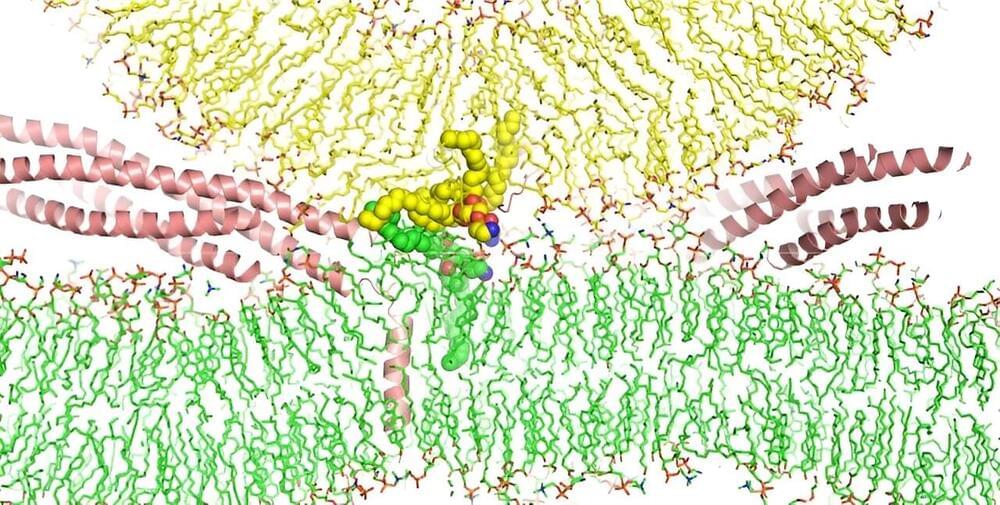

Supercomputer simulation reveals new mechanism for membrane fusion

Posted by Genevieve Klien in categories: biotech/medical, supercomputing

An intricate simulation performed by UT Southwestern Medical Center researchers using one of the world’s most powerful supercomputers sheds new light on how proteins called SNAREs cause biological membranes to fuse.

Their findings, reported in the Proceedings of the National Academy of Sciences, suggest a new mechanism for this ubiquitous process and could eventually lead to new treatments for conditions in which membrane fusion is thought to go awry.

“Biology textbooks say that SNAREs bring membranes together to cause fusion, and many people were happy with that explanation. But not me, because membranes brought into contact normally do not fuse. Our simulation goes deeper to show how this important process takes place,” said study leader Jose Rizo-Rey (“Josep Rizo”), Ph.D., Professor of Biophysics, Biochemistry, and Pharmacology at UT Southwestern.

Apr 24, 2024

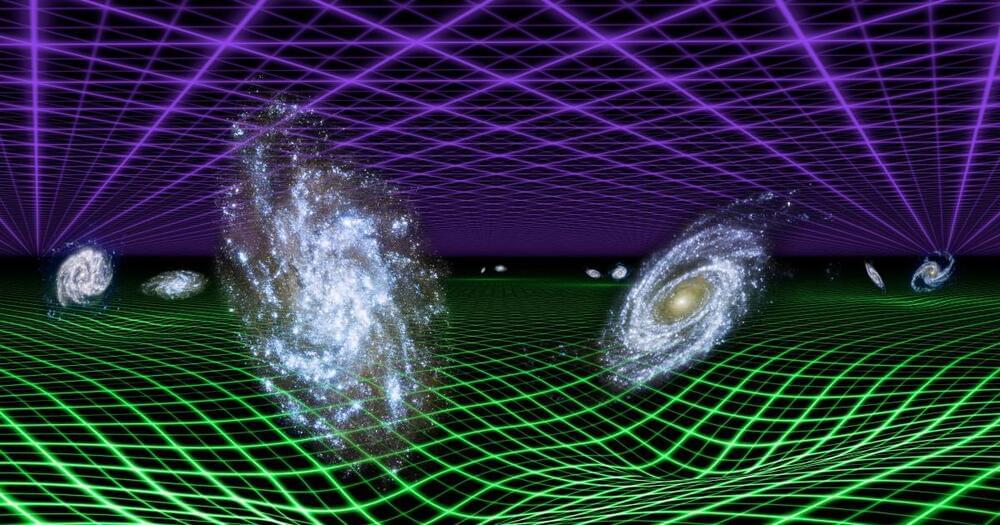

The basis of the universe may not be energy or matter but information

Posted by Dan Breeden in categories: particle physics, supercomputing

Apr 22, 2024

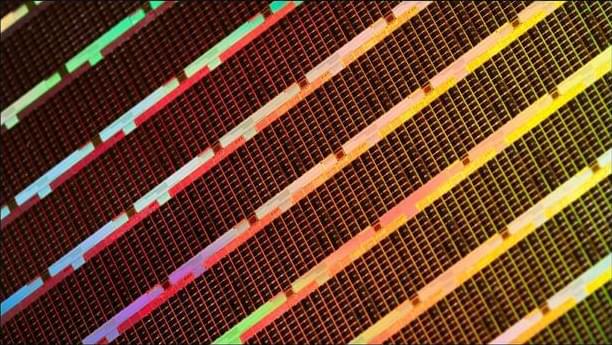

NVIDIA To Collaborate With Japan On Their Cutting-Edge ABCI-Q Quantum Supercomputer

Posted by Genevieve Klien in categories: quantum physics, robotics/AI, supercomputing

NVIDIA is all set to aid Japan in building the nation’s hybrid quantum supercomputer, fueled by the immense power of its HPC & AI GPUs.

Japan To Progress Rapidly In Quantum and AI Computing Segments Through Large-Scale Developments With The Help of NVIDIA’s AI & HPC Infrastructure

Nikkei Asia reports that Japan’s National Institute of Advanced Industrial Science and Technology (AIST) is building a quantum supercomputer to strengthen its position in this segment. The new project, called ABCI-Q, will be entirely powered by NVIDIA’s accelerated and quantum computing platforms, which points to high performance and efficiency from the system. The Japanese supercomputer will also be built in collaboration with Fujitsu.