Apr 9, 2023

The Red Pill of Machine Learning

Posted by Dan Breeden in categories: information science, mathematics, mobile phones, robotics/AI, transportation

A fascinating proposal for a methodology.

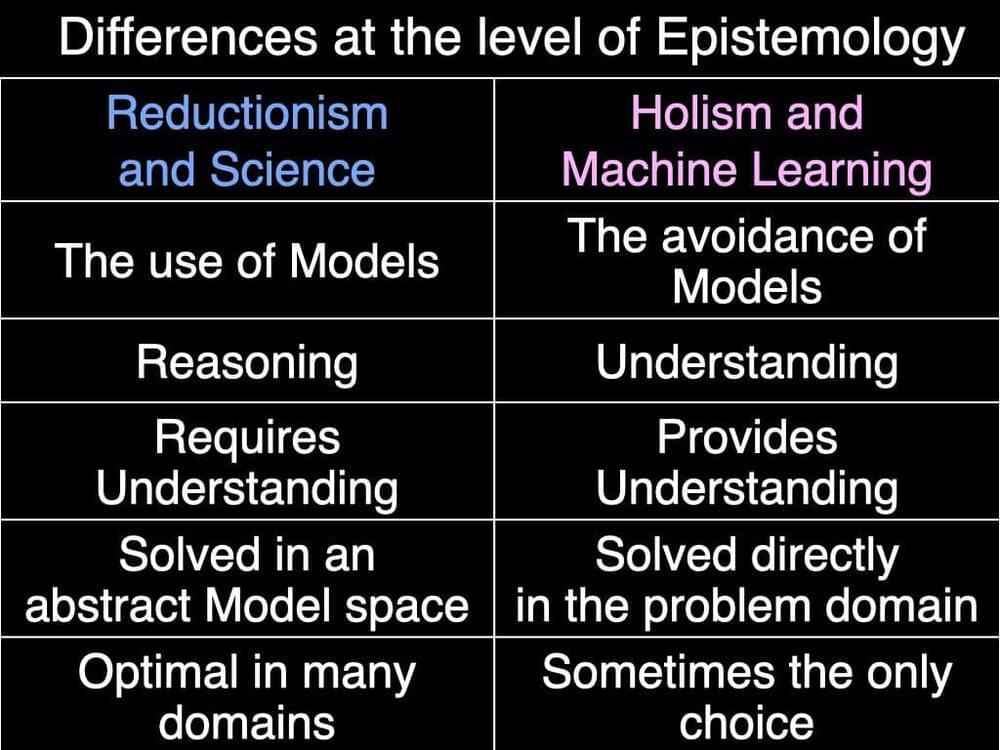

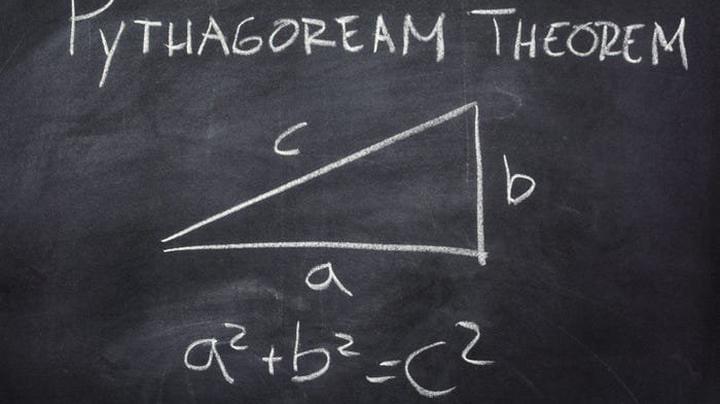

Models include scientific models, theories, hypotheses, formulas, equations, naïve models based on personal experiences, superstitions (!), and traditional computer programs. In a Reductionist paradigm, these Models are created by humans, ostensibly by scientists, and are then used, ostensibly by engineers, to solve real-world problems. Both Model creation and Model use require that these humans Understand the problem domain, the problem at hand, the shared Models already available, and how to design and use Models. A Ph.D. degree could be seen as a formal license to create new Models[2]. Mathematics can be seen as a discipline for Model manipulation.