Archive for the ‘information science’ category: Page 261

Apr 16, 2018

Google made an AR microscope that can help detect cancer

Posted by Genevieve Klien in categories: augmented reality, biotech/medical, information science, robotics/AI

In a talk given today at the American Association for Cancer Research’s annual meeting, Google researchers described a prototype of an augmented reality microscope that could be used to help physicians diagnose patients. When pathologists are analyzing biological tissue to see if there are signs of cancer — and if so, how much and what kind — the process can be quite time-consuming. And it’s a practice that Google thinks could benefit from deep learning tools. But in many places, adopting AI technology isn’t feasible. The company, however, believes this microscope could allow groups with limited funds, such as small labs and clinics, or developing countries to benefit from these tools in a simple, easy-to-use manner. Google says the scope could “possibly help accelerate and democratize the adoption of deep learning tools for pathologists around the world.”

The microscope is an ordinary light microscope, the kind used by pathologists worldwide. Google just tweaked it a little in order to introduce AI technology and augmented reality. First, neural networks are trained to detect cancer cells in images of human tissue. Then, after a slide with human tissue is placed under the modified microscope, the same image a person sees through the scope’s eyepieces is fed into a computer. AI algorithms then detect cancer cells in the tissue, which the system then outlines in the image seen through the eyepieces (see image above). It’s all done in real time and works quickly enough that it’s still effective when a pathologist moves a slide to look at a new section of tissue.
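
The detect-then-outline step described above can be sketched in a few lines. This is only an illustration under stated assumptions, not Google's implementation: it assumes a trained model has already produced a per-pixel score for the current field of view (the `frame` grid below is made-up data), and the function names `detect_region`, `outline`, and `render` are hypothetical.

```python
def detect_region(prob_map, threshold=0.5):
    """Return the pixels whose (hypothetical) model score meets the threshold."""
    return {
        (r, c)
        for r, row in enumerate(prob_map)
        for c, p in enumerate(row)
        if p >= threshold
    }


def outline(region):
    """Return the region pixels that touch at least one pixel outside the region."""
    neighbours = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return {
        (r, c)
        for r, c in region
        if any((r + dr, c + dc) not in region for dr, dc in neighbours)
    }


def render(prob_map, edge):
    """Draw the overlay as text: '#' marks an outline pixel, '.' anything else."""
    lines = []
    for r, row in enumerate(prob_map):
        lines.append("".join("#" if (r, c) in edge else "." for c in range(len(row))))
    return "\n".join(lines)


# Made-up scores for one 5x5 field of view (assumption: higher means more suspicious).
frame = [
    [0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.9, 0.8, 0.9, 0.1],
    [0.1, 0.8, 0.9, 0.8, 0.1],
    [0.1, 0.9, 0.8, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1],
]

edge = outline(detect_region(frame))
print(render(frame, edge))
```

In a live system this step would have to be repeated for every new frame, which is why the speed of the detector matters when the pathologist moves the slide.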

Apr 13, 2018

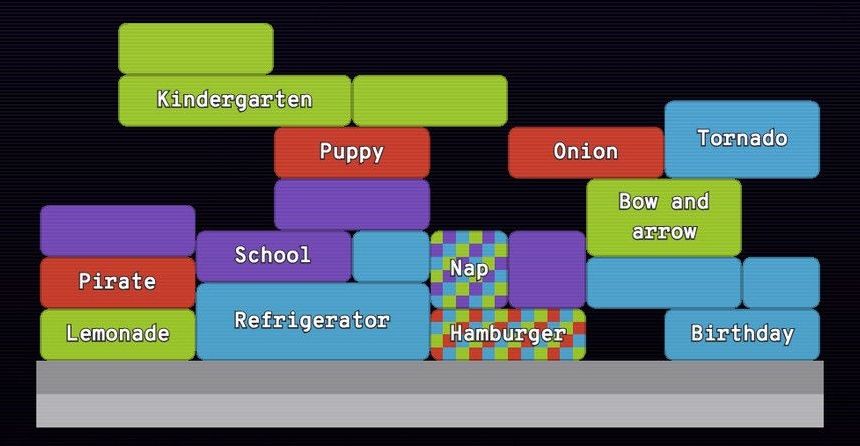

Google’s latest AI experiments let you talk to books and test word association skills

Posted by Genevieve Klien in categories: business, engineering, habitats, information science, Ray Kurzweil, robotics/AI

Google today announced a pair of new artificial intelligence experiments from its research division that let web users dabble in semantics and natural language processing. For Google, a company whose primary product is a search engine that traffics mostly in text, these advances in AI are integral to its business and to its goals of making software that can understand and parse elements of human language.

The website will now house any interactive AI language tools, and Google is calling the collection Semantic Experiences. The primary sub-field of AI it’s showcasing is known as word vectors, a type of natural language understanding that maps “semantically similar phrases to nearby points based on equivalence, similarity or relatedness of ideas and language.” It’s a way to “enable algorithms to learn about the relationships between words, based on examples of actual language usage,” say Ray Kurzweil, notable futurist and director of engineering at Google Research, and product manager Rachel Bernstein in a blog post. Google has published its work on the topic in a paper, and it has also made a pre-trained module available on its TensorFlow platform for other researchers to experiment with.

The first of the two publicly available experiments released today is called Talk to Books, and it quite literally lets you converse with a machine learning-trained algorithm that surfaces answers to questions with relevant passages from human-written text. As described by Kurzweil and Bernstein, Talk to Books lets you “make a statement or ask a question, and the tool finds sentences in books that respond, with no dependence on keyword matching.” The duo add that, “In a sense you are talking to the books, getting responses which can help you determine if you’re interested in reading them or not.”
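
The idea of matching a question to passages by meaning rather than by keywords can be illustrated with a toy example. This is a minimal sketch under loud assumptions: the three-dimensional vectors below are invented by hand for illustration, whereas a real system would obtain vectors from a trained sentence encoder; the names `TOY_EMBEDDINGS`, `cosine`, and `best_match` are hypothetical and are not part of any Google tool.

```python
import math


def cosine(a, b):
    """Cosine similarity: close to 1.0 when two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made stand-ins for sentence vectors (assumption: a trained encoder
# would place sentences with related meanings near each other like this).
TOY_EMBEDDINGS = {
    "The ocean is home to countless species.": [0.9, 0.1, 0.0],
    "Stock prices fell sharply on Monday.": [0.0, 0.1, 0.9],
    "Whales migrate thousands of miles each year.": [0.8, 0.3, 0.1],
}


def best_match(query_vec, passages):
    """Return the passage whose vector is most similar to the query vector."""
    return max(passages, key=lambda text: cosine(query_vec, passages[text]))


# Hypothetical vector for the question "How far do sea animals travel?"
query_vec = [0.85, 0.3, 0.05]
print(best_match(query_vec, TOY_EMBEDDINGS))
```

The question shares no keywords with the whale sentence, yet that sentence ranks first because its vector lies closest to the query's, which is the property the quoted description relies on.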

Apr 13, 2018

What is relativity? Einstein’s mind-bending theory explained

Posted by Genevieve Klien in categories: information science, nuclear energy

Albert Einstein is famous for his theory of relativity, and GPS navigation and nuclear energy would be impossible without the equation E = mc².

Apr 13, 2018

Using an algorithm to reduce energy bills—rain or shine

Posted by Bill Kemp in categories: information science, robotics/AI, solar power, sustainability

Researchers proposed implementing the residential energy scheduling algorithm by training three action-dependent heuristic dynamic programming (ADHDP) networks, one for each of three weather types: sunny, partly cloudy, and cloudy. ADHDP networks are considered ‘smart,’ as their response can change based on different conditions.

“In the future, we expect to have various types of power supplies to every household including the grid, windmills, solar panels and biogenerators. The issues here are the varying nature of these power sources, which do not generate electricity at a stable rate,” said Derong Liu, a professor with the School of Automation at the Guangdong University of Technology in China and an author on the paper. “For example, power generated from windmills and solar panels depends on the weather, and they vary a lot compared to the more stable power supplied by the grid. In order to improve these power sources, we need much smarter algorithms in managing/scheduling them.”

The details were published in the January 10 issue of IEEE/CAA Journal of Automatica Sinica, a joint bimonthly publication of the IEEE and the Chinese Association of Automation.

Apr 13, 2018

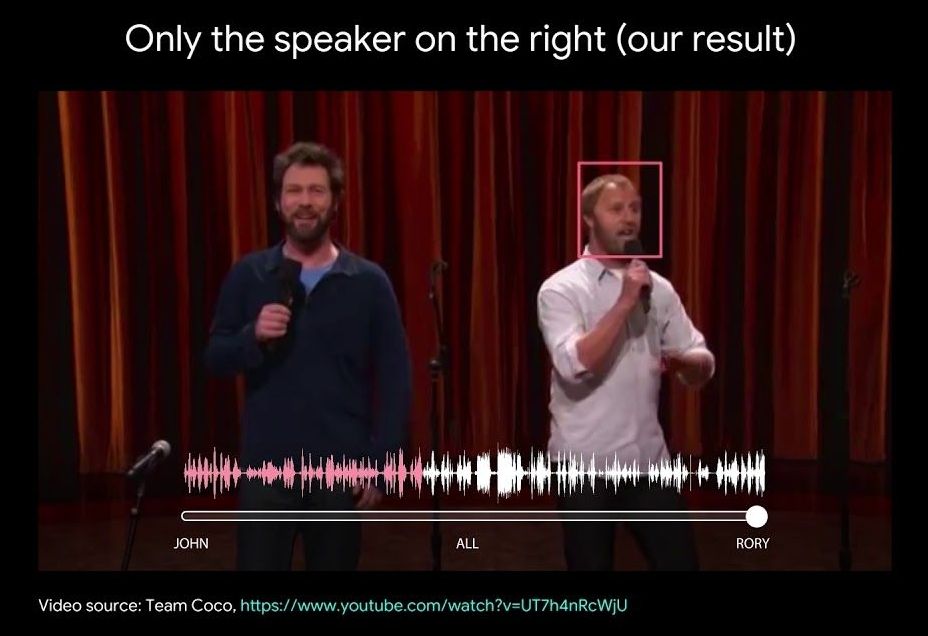

Looking to Listen: Audio-Visual Speech Separation

Posted by Klaus Baldauf in categories: biotech/medical, information science, robotics/AI

People are remarkably good at focusing their attention on a particular person in a noisy environment, mentally “muting” all other voices and sounds. Known as the cocktail party effect, this capability comes naturally to us humans. However, automatic speech separation (separating an audio signal into its individual speech sources), while a well-studied problem, remains a significant challenge for computers.

In “Looking to Listen at the Cocktail Party”, we present a deep learning audio-visual model for isolating a single speech signal from a mixture of sounds such as other voices and background noise. In this work, we are able to computationally produce videos in which speech of specific people is enhanced while all other sounds are suppressed. Our method works on ordinary videos with a single audio track, and all that is required from the user is to select the face of the person in the video they want to hear, or to have such a person be selected algorithmically based on context. We believe this capability can have a wide range of applications, from speech enhancement and recognition in videos, through video conferencing, to improved hearing aids, especially in situations where there are multiple people speaking.

Apr 12, 2018

A Global Arms Race for Killer Robots Is Transforming Warfare

Posted by Amnon H. Eden in categories: drones, government, information science, military, robotics/AI

Over the weekend, experts on military artificial intelligence from more than 80 world governments converged on the U.N. offices in Geneva for the start of a week’s talks on autonomous weapons systems. Many of them fear that after gunpowder and nuclear weapons, we are now on the brink of a “third revolution in warfare,” heralded by killer robots — the fully autonomous weapons that could decide who to target and kill without human input. With autonomous technology already in development in several countries, the talks mark a crucial point for governments and activists who believe the U.N. should play a key role in regulating the technology.

The meeting comes at a critical juncture. In July, Kalashnikov, the main defense contractor of the Russian government, announced it was developing a weapon that uses neural networks to make “shoot-no shoot” decisions. In January 2017, the U.S. Department of Defense released a video showing an autonomous drone swarm of 103 individual robots successfully flying over California. Nobody was in control of the drones; their flight paths were choreographed in real time by an advanced algorithm. The drones “are a collective organism, sharing one distributed brain for decision-making and adapting to each other like swarms in nature,” a spokesman said. The drones in the video were not weaponized, but the technology to do so is rapidly evolving.

Apr 11, 2018

US approves artificial-intelligence device for diabetic eye problems

Posted by Genevieve Klien in categories: biotech/medical, information science, robotics/AI

US regulators Wednesday approved the first device that uses artificial intelligence to detect eye damage from diabetes, allowing regular doctors to diagnose the condition without interpreting any data or images.

The device, called IDx-DR, can diagnose a condition called diabetic retinopathy, the most common cause of vision loss among the more than 30 million Americans living with diabetes.

Its software uses an artificial intelligence algorithm to analyze images of the eye, taken with a retinal camera called the Topcon NW400, the FDA said.

Apr 11, 2018

FDA approves AI-powered diagnostic that doesn’t need a doctor’s help

Posted by Genevieve Klien in categories: biotech/medical, information science, robotics/AI

Marking a new era of “diagnosis by software,” the US Food and Drug Administration on Wednesday gave permission to a company called IDx to market an AI-powered diagnostic device for ophthalmology.

What it does: The software is designed to detect more than a mild level of diabetic retinopathy, the most common cause of vision loss among the more than 30 million people in the US living with diabetes. The condition occurs when high blood sugar damages blood vessels in the retina.

How it works: The program uses an AI algorithm to analyze images of the adult eye taken with a special retinal camera. A doctor uploads the images to a cloud server, and the software then delivers a positive or negative result.

Apr 10, 2018

Human bias is a huge problem for AI. Here’s how we’re going to fix it

Posted by Genevieve Klien in categories: information science, robotics/AI

Machines don’t actually have bias. AI doesn’t ‘want’ something to be true or false for reasons that can’t be explained through logic. Unfortunately, human bias exists in machine learning from the creation of an algorithm to the interpretation of data, and until now hardly anyone has tried to solve this huge problem.

A team of scientists from the Czech Republic and Germany recently conducted research to determine the effect human cognitive bias has on interpreting the output used to create machine learning rules.

The team’s white paper explains how 20 different cognitive biases could potentially alter the development of machine learning rules and proposes methods for “debiasing” them.
