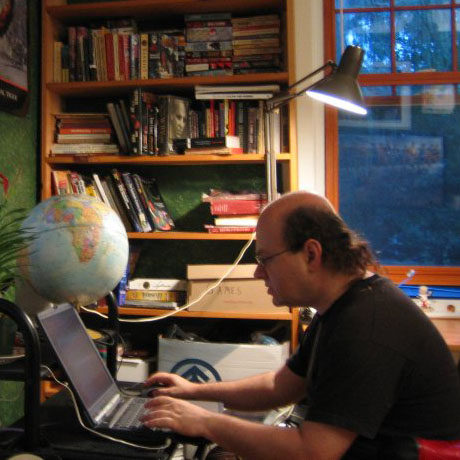

Dr. Michael A. Bukatin

Michael A. Bukatin, Ph.D., is a Senior Software Engineer at Nokia.

Mishka’s theoretical interests concentrate on applications of continuous mathematics to computing; domains for denotational semantics are the prime example of such applications. Another important area of such applications is realistic neural networks.

His practical interests are in programming language design and implementation, in methods and tools for software engineering, and in audio-visual computer-based cognitive tools.

His AI-related interests include automation of programming, optimization over spaces of programs, machine learning over spaces of programs (“symbolic regression”), algebra and analysis over spaces of programs, neuroscience, spiking neural nets, mathematics of heterogeneous spaces, philosophy of general AI (“AGI”), including research related to the “hard problem of consciousness”, problems related to uploading, and problems related to the technological singularity and friendly AI.

Mishka coauthored “An Intelligent Theory of Cost for Partial Metric Spaces”, “A confidence-based framework for disambiguating geographic terms”, “Partial Metric Spaces”, “A view of thermodynamics of hydration emerging from continuum studies”, “Magnitude of Hydration Entropies of Nonpolar and Polar Molecules”, and “On the nature of correspondence between partial metrics and fuzzy equalities”, and authored “Logic of Fixed Points and Scott Topology”. Learn more about his computer science papers, his computational chemistry papers, and his essays.

There is a wide range of approaches to the issue of AI friendliness and not much consensus among researchers in this field. This is quite appropriate given how unpredictable the situation in the field of software is, and how little, if anything, we can understand about post-singularity dynamics.

At one pole are people who hope for, and work towards, a formal framework for developing friendly AI with formal guarantees of friendliness. This seems to be a highly attractive approach despite rather widespread doubts about its feasibility, but there is a strong chance that it will not be competitive enough against other, more direct routes to general AI.

Motivated by rather slow progress in formal methods for AI friendliness, alternative approaches look at various possible flavors of AI and compare their chances of being friendly. There is a wide diversity of opinion here as well.

Then there is another pole, opposite to the pole of formal guarantees, which is somewhat underrepresented. This approach might seem strange at first glance. Can a text (whether in a natural language or in some formal language), or another chunk of information, which is not part of the “winning AGI system” itself but merely a small part of its input (e.g. downloaded by the AGI in question from the Web), make a difference where friendliness is concerned?

Mishka wants to add a few words about this underrepresented pole, in the hope of encouraging people to think more about it. First of all, this approach relies on the assumption that the AGI in question will have reasonable capabilities to understand, interpret, and use human-created texts or other artifacts. If that is not the case, our chances of friendliness will probably be quite low. However, the ability to meaningfully handle human-created texts and other artifacts seems to provide a significant initial competitive advantage for AGIs, which inspires hope that such an ability will be present.

We note that from an “ordinary human point of view” this approach makes more sense than enforcement of formal constraints. It is natural to try to talk to your child (who in this case is supposed to be much smarter than you), and this appears somehow “more decent” than manipulating the situation in order to constrain the behavior of your (exceedingly smart) child.

We do see an occasional “letter to a future general AI” about ethical issues, and any such letter is an attempt at this approach.

This is also not a bad approach from a computer science viewpoint: data and programs are the same thing, hence any input to a program should be viewed as a chunk of code in the language defined by the program in question. But Mishka has not seen any attempts to analyze the situation for different possible architectures of AI, or to take into account the vast sea of inputs of various kinds (including the body of all texts on friendly AI!), or anything like that. It is such a “metalevel analysis” of this approach that seems to be completely missing.
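To make the “data and programs are the same thing” point concrete, here is a minimal Python sketch. It is an illustration only, not drawn from Mishka’s work: `run` is a hypothetical toy interpreter, and its token set (integers, `+`, `*`, `dup`) is an assumption chosen for brevity. To the caller, the input string is plain data; to `run`, the same string is a program in the small stack language that `run` itself defines.

```python
def run(program_input: str) -> list[int]:
    """Hypothetical toy stack interpreter (illustrative assumption).

    The input is ordinary text to whoever supplies it, but this function
    defines a tiny language in which that text is executed as code:
    integers are pushed, '+' and '*' combine the top two values,
    and 'dup' duplicates the top value.
    """
    stack: list[int] = []
    for token in program_input.split():
        if token == "+":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif token == "*":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        elif token == "dup":
            stack.append(stack[-1])
        else:
            stack.append(int(token))  # any other token is read as an integer literal
    return stack


# Both inputs are the same kind of data (strings), yet each one
# "programs" the interpreter to compute something different.
print(run("2 3 +"))        # [5]
print(run("2 dup * 3 +"))  # [7]
```

In the same spirit, what a text downloaded by an AGI “does” depends on the language that the receiving system implicitly defines, which is one reason such an analysis would have to consider different possible architectures of AI.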

Mishka earned his M.S. in Applied Mathematics at the Moscow Institute of Railroad Engineers in 1986. He earned his Ph.D. in Computer Science at Brandeis University in 2002 with the dissertation Mathematics of Domains.