Jan 7, 2017

Moore’s Law Will Soon End, but Progress Doesn’t Have to

Posted by Karen Hurst in categories: mobile phones, supercomputing

In 1965, Intel co-founder Gordon Moore published a remarkably prescient paper observing that the number of transistors on an integrated circuit was doubling about every year, a rate he revised in 1975 to every two years, and predicting that this growth would lead to computers becoming embedded in homes, cars and communication systems.

That simple idea, known today as Moore’s Law, has helped power the digital revolution. As computing performance has become exponentially cheaper and more robust, we have been able to do a lot more with it. Even a basic smartphone today is more powerful than the supercomputers of past generations.

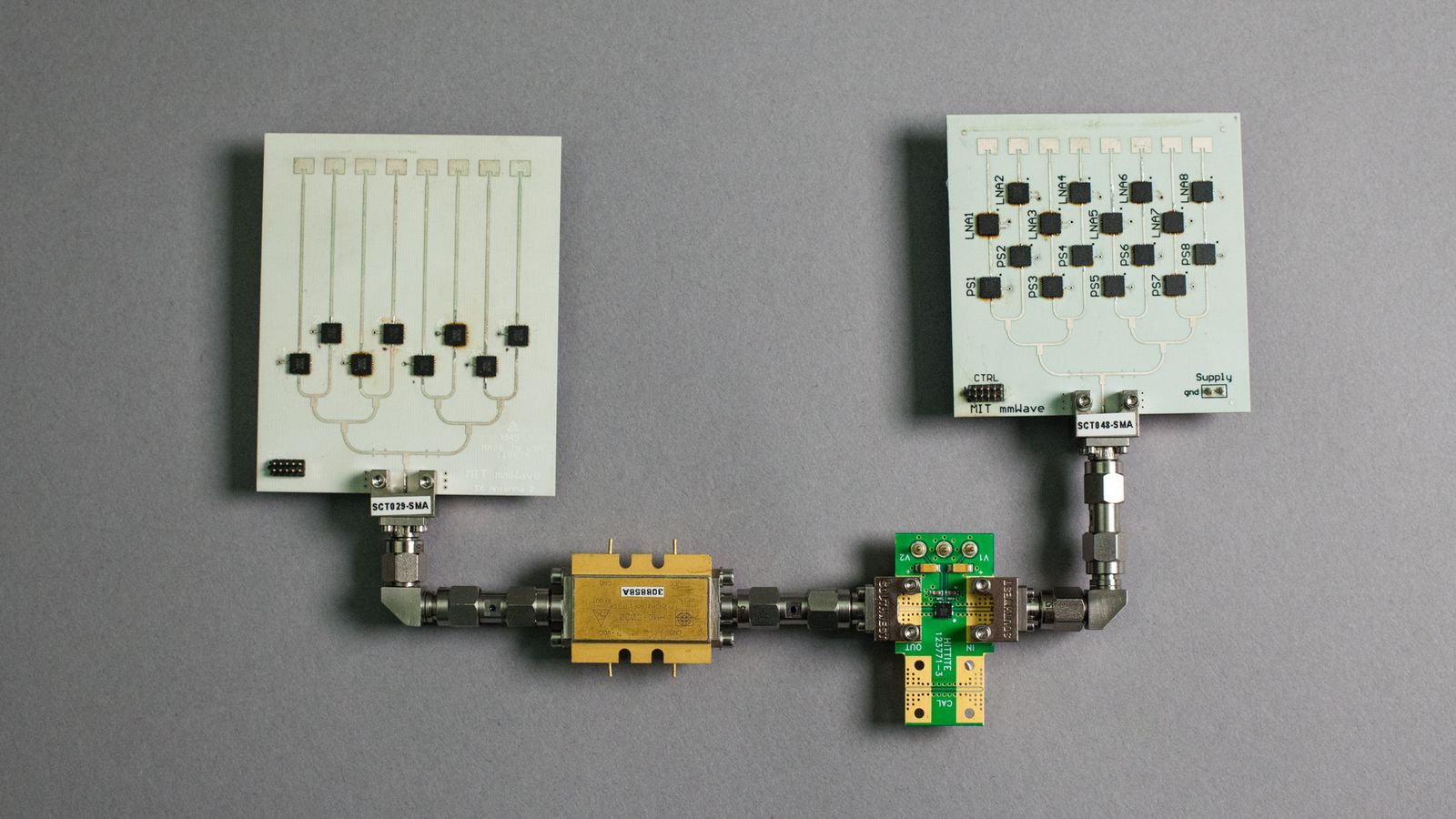

Yet the law has been fraying for years, and experts predict that it will soon reach its limits. Still, when I spoke to Bernie Meyerson, IBM’s Chief Innovation Officer, he felt strongly that the end of Moore’s Law doesn’t mean the end of progress. Not by a long shot. What we’ll see, though, is a shift in emphasis from the microchip to the system as a whole.
